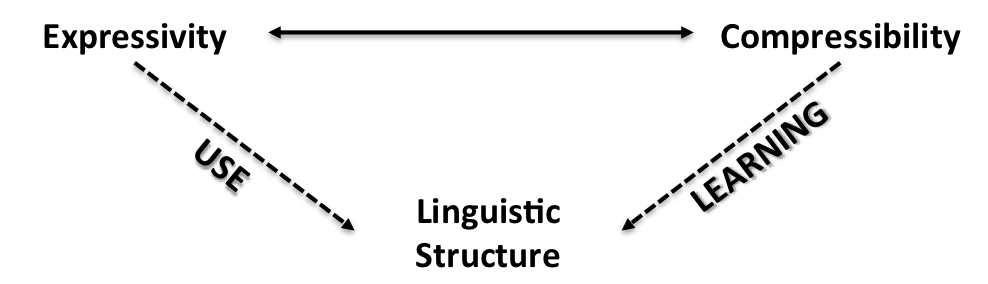

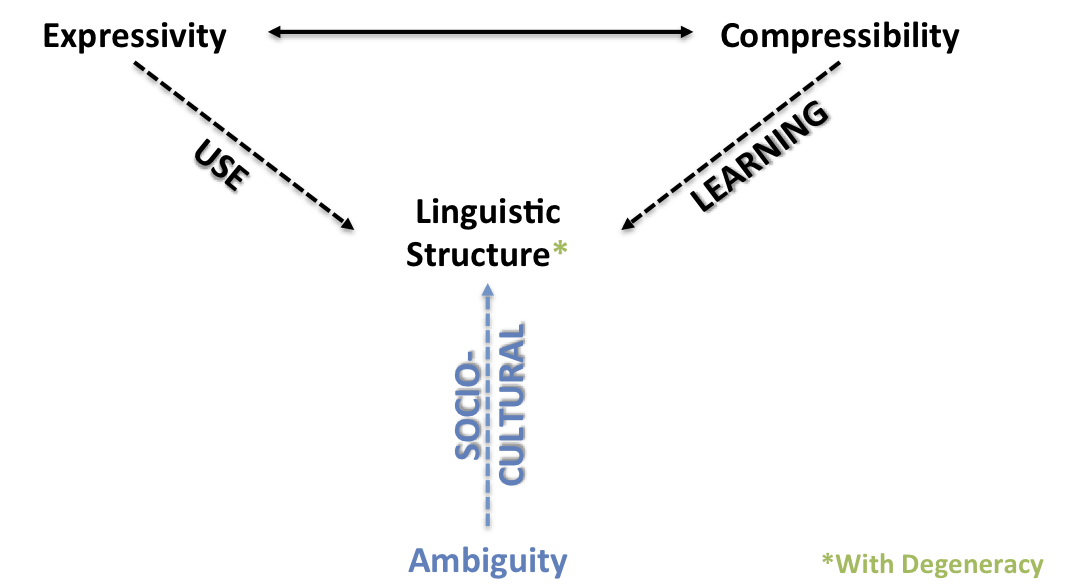

Two weeks ago my supervisor, Simon Kirby, gave a talk on some of the work that’s been going on in the LEC. Much of his talk focused on one of the key areas in language evolution research: the emergence of the basic design features that underpin language as a system of communication. He gave several examples of these design features, mostly drawn from the eminent linguist Charles Hockett, before moving on to one of the main areas of focus over the past few years: compositionality (the property whereby complex expressions derive their meaning from the combined meanings of their parts; see Michael’s post and Sean’s post for some good previous coverage). Simon’s argument is that compositionality, along with some other design features of language, emerges from two competing constraints: a pressure to be useful (expressivity) and a pressure to be learnable (compressibility).

The general gist of the talk was that by varying the relative strength of these two constraints we can evolve very different systems of communication. To get something approaching language, we need to strike a balance between learning and use. First, naïve learning is required because it forces language to adapt to the learning bottleneck imposed by the maturational constraints on child learning. Still, even with this inter-generational learning pressure, language isn’t merely a matter of passively remembering and reproducing a set of forms and meanings. We also need to account for usage dynamics: the system must be expressive, insofar as its signals must be able to differentiate between meanings.

From Kirby, Cornish & Smith’s (2008) work we know that a language heavily biased towards maximal expressivity looks very much like the initial generation of their experiments: an idiosyncratic set of one-form-to-one-meaning pairs without any systematic structure. It’s expressive because every possible meaning in the space has a label. By contrast, a stronger bias towards learnability results in highly compressible languages: that is, highly underspecified systems of communication, the most extreme example being one form for all meanings. Balancing these two forces over Iterated Learning (henceforth, IL) leads to the emergence of compositionality: a learnable, yet highly structured communication system that results from a pressure to generalise over a set of novel stimuli.
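To make the trade-off concrete, here is a minimal Python sketch over a hypothetical meaning space of three shapes and three colours. It is not Kirby, Cornish & Smith’s (2008) setup or measure. Expressivity is approximated as the fraction of meanings that receive a distinct form, and compressibility as the number of entries a learner would have to memorise, under the simplifying assumption that a learner splits each four-letter form into a two-letter shape part and a two-letter colour part whenever that split is consistent. All names (`SHAPES`, `COLOURS`, `HOLISTIC`, `UNDERSPECIFIED`, `COMPOSITIONAL`, `expressivity`, `storage_cost`) and all word forms are invented for illustration.

```python
from itertools import product

SHAPES = ["circle", "square", "triangle"]
COLOURS = ["red", "blue", "green"]
MEANINGS = list(product(SHAPES, COLOURS))  # 9 hypothetical meanings

# Holistic: an arbitrary, unstructured form for every meaning (one-to-one).
HOLISTIC = dict(zip(MEANINGS, ["kipo", "mula", "teso", "ravi", "nome",
                               "gaku", "lisu", "bope", "fani"]))
# Underspecified: one form for all meanings (the extreme compressible case).
UNDERSPECIFIED = {m: "kipo" for m in MEANINGS}
# Compositional: form = shape morpheme + colour morpheme.
SHAPE_MORPH = {"circle": "ki", "square": "mu", "triangle": "te"}
COLOUR_MORPH = {"red": "po", "blue": "la", "green": "so"}
COMPOSITIONAL = {(s, c): SHAPE_MORPH[s] + COLOUR_MORPH[c] for s, c in MEANINGS}


def expressivity(language):
    """Fraction of meanings that receive a distinct form (1.0 = no homonymy)."""
    return len(set(language.values())) / len(language)


def storage_cost(language):
    """Entries a learner must memorise. Assumes (for illustration only) that
    the learner splits each form into a 2-letter shape half and a 2-letter
    colour half, and uses those parts whenever the split is consistent."""
    whole_forms = len(set(language.values()))
    shape_parts, colour_parts = {}, {}
    for (shape, colour), form in language.items():
        head, tail = form[:2], form[2:]
        # setdefault returns the stored part; a mismatch means no consistent split
        if (shape_parts.setdefault(shape, head) != head
                or colour_parts.setdefault(colour, tail) != tail):
            return whole_forms
    parts = len(set(shape_parts.values())) + len(set(colour_parts.values()))
    return min(whole_forms, parts)


if __name__ == "__main__":
    for name, lang in [("holistic", HOLISTIC),
                       ("underspecified", UNDERSPECIFIED),
                       ("compositional", COMPOSITIONAL)]:
        print(f"{name:15} expressivity={expressivity(lang):.2f} "
              f"storage={storage_cost(lang)}")
```

Under these assumptions the holistic language is fully expressive but needs 9 stored entries, the underspecified language needs only 1 entry but distinguishes just 1 of 9 meanings, and the compositional language stays fully expressive with 6 entries (three shape parts plus three colour parts).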

Naïve Optimisation and Ambiguity

One perhaps under-discussed consequence of the IL experiments is their adoption of naïve optimisation: in the tradeoff between expressivity and compressibility, the systems that evolve are highly optimised, with one-to-one form-meaning pairs. Part of the problem with these systems is that they aren’t very robust to perturbations; that is, they are only stable within the very narrow confines of the experimental conditions. If we applied the same logic to, say, biology, then it would be inefficient to maintain two lungs and two kidneys: redundancy is eschewed in favour of efficiency. Yet, as I’ll discuss below, certain types of redundancy even allow a system to benefit from perturbations (what Taleb describes as antifragile).

Now, don’t get me wrong: this is not a negative criticism of the IL experiments. After all, the whole point of performing experiments is to abstract away from noisy environments, and, more importantly, I think these experiments are definitely on the right track in telling an important part of the story of language evolution. What I’m trying to get at is that, far from playing a cameo role in this story, redundancy is a crucial component of language structure. Furthermore, as we already know from Kirby et al.’s experiments, redundancy doesn’t necessarily derive from the two pressures of expressivity and compressibility, and certainly not in the emergence of compositionality (although we do get redundancy in some of the other conditions). Instead, I think there’s another claim to be made: that there is a salient ambiguity pressure acting on language, and that this, in combination with the other forces, gives rise to linguistic structure that’s (more) robust.

Ambiguity exists at many layers of language: there is lexical ambiguity, syntactic ambiguity, scope ambiguity and many other types. Broadly conceived, then, ambiguity corresponds to any state in which a linguistic code contains forms that are conventionally associated with more than one meaning (Hoefler, 2009). So far, I’ve hinted that ambiguity pressures can be solved through naïve optimisation: via a competition dynamic, ambiguous forms are simply removed from the system. This is an efficient way of resolving the problem, as it allows only one possible form for each meaning. As such, by increasing its expressivity and compressibility, a language is seen as minimising the amount of ambiguity present.

Yet, as we know, examples from real language show that both ambiguity and redundancy are abundant. Take, for example, the word port: this is a clear instance of lexical ambiguity, in that it can refer either to a harbour or to a fortified wine. In everyday language use, it’s very unlikely that communication will collapse because of this ambiguity, largely because the system is not highly optimised. It is robust because it relies on multiple strategies for solving the same communicative problem (in itself, a form of redundancy). To take just two solutions to the port problem, we could either (a) choose an alternative form such as harbour or fortified wine; or (b) use pragmatic inference and extra-linguistic contextual information. For example, I could say ‘this is a nice port’ whilst standing on a harbour holding a bottle of fortified wine, and reinforce my intended meaning by gesturing towards the latter, raising the glass or nodding my head.

As I’ve started to hint above, an alternative to naïve optimisation, and one that is consistent with what we observe in language, might be found in a special type of redundancy known as degeneracy (see my previous posts). In a nutshell, I’m claiming that degeneracy emerges as a robust solution to ambiguity pressures, and should be considered a design feature of language. There are three claims I want to explore over the next couple of posts: (a) that ambiguity is an important pressure alongside expressivity and compressibility in the emergence of linguistic structure; (b) that degeneracy is a design feature of language that enhances robustness, creates a greater capacity to innovate in response to perturbations, and is concomitant with a rise in multi-scaled complexity; and (c) that social structure and cultural complexity play a fundamental role in increasing ambiguity pressures and degeneracy.

Degeneracy as a Design Solution to Ambiguity Pressures in Language

Defined as the presence of components with a many-to-one structure-to-function ratio, the unfortunately named concept of degeneracy is a well-known characteristic of biological systems. It is found in the genetic code (many different nucleotide sequences can encode the same polypeptide) and in immune responses (populations of antibodies and other antigen-recognition molecules can take on multiple functions), among many others (cf. Edelman & Gally, 2001). Believed to be a precondition for open-ended evolution, because it facilitates the coexistence of “high robustness, growing complexity, and the continued propensity for innovation” (Whitacre, 2010: 1), degeneracy has more recently been appreciated as potentially having broader applications, with Mason (2010) offering a value-free, scientific definition:

Degeneracy is observed in a system if there are components that are structurally different (nonisomorphic) and functionally similar (isofunctional) with respect to context (Mason, 2010: 281).

As in biological systems, degeneracy is perhaps also a crucial characteristic of language, likely existing at multiple levels of organisation, ranging from individual phoneme segments underpinned by multiple phonetic tokens to different grammatical structures that express similar meanings. We also see degeneracy as a general property of communication systems, allowing for high levels of robustness through functionally redundant components in both multichannel and multimodal signals (Ay, Flack & Krakauer, 2007).

What I want to argue is that (a) degeneracy solves the ambiguity problem whilst keeping the system expressive and compressible, and (b) degeneracy is advantageous over naïve optimisation because it offers a more robust and evolvable system.

One solution to the ambiguity problem, as suggested above, is to have multiple signals for the same or similar meanings. Yet, as we’re well aware, true synonymy in language is rare. Even words that might at first glance seem synonymous, such as soda vs. pop, normally display some sort of differential patterning based on contextual factors governing word use (Altmann et al., 2010; see previous Replicated Typo coverage). As such, we can view these words as degenerate: they are different structures with overlapping, context-dependent function(s). To avoid direct competition, these words enter into a synergistic relationship, whereby they become increasingly specialised, carving up distinct areas of the socio-communicative functional space without impinging upon each other. So, while they might overlap in lexical-semantic function, these terms will be differentiated by syntactic, pragmatic and/or social functions.

What we get as a result of degeneracy is functional interdependency between co-evolving signals. This is advantageous for several reasons. First, degeneracy enables system-wide increases in expressivity: if there’s functional interdependency between signals, then you can specify more of the functional space across all of the signals whilst lowering the expressivity of each individual signal. Second, each signal is robust to ambiguity because it can offload onto structurally different but functionally similar signals: this decreases the chance of ambiguity arising between speaker and hearer, as they can rely on more specialised signal forms. Lastly, linking these two points together, functional interdependency allows for greater compression of signals: each signal is able to encode less through specialisation and by offloading onto context-dependent factors.

Take dog and hound: these two lexical items perform similar functions and can be used interchangeably in some contexts, yet they have co-evolved to carve up their semantic space in a specialised manner, with dog referring to all possible forms of Canis lupus familiaris, whereas hound is now only used to denote a certain subset of dogs (their etymology is interesting here, because in the 14th century the basic term for the category was hound, and dog referred to a specific breed). Together they are more expressive, in that they carve up the semantic space in a more specialised manner. In isolation, they are more compressed, in that their referential load is decreased: dog refers only to the general set, and to disambiguate between breeds you need to provide more information (and vice versa for hound). Naturally, we could disambiguate via the pragmatic context (for instance, if there were two dogs in the immediate vicinity), or we could specify further by describing the differences between the dogs, yet there are situations where having two distinct words is clearly advantageous.

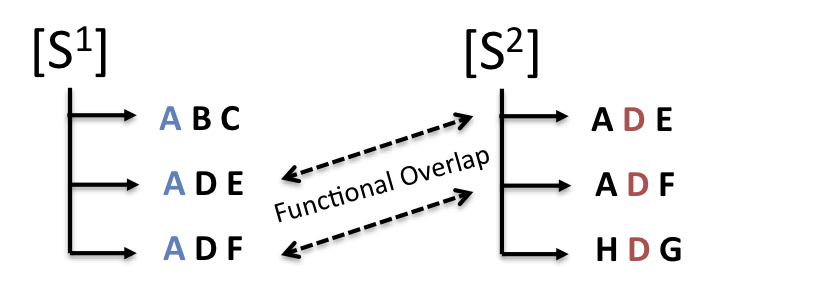

To drive the point home in a more abstract manner: say we have two signals that refer to several different combinations of features (see figure above). The first signal, S1, refers to anything with feature A, whereas the second signal, S2, refers to anything with feature D. Even though these two signals are not synonymous, in that they do not refer to exactly the same feature combinations, there is substantial functional overlap in the members they include.

First of all, distributing function across two dissimilar structures is robustness-enhancing: if one structure is deleted, there is no massive loss of function. Next, these signals are both more expressive together (they are able to carve up and encode more features in the functional-meaning space) and more compressible (individually, each encodes less). Lastly, as they are context-specific, these signals are able to solve potential ambiguity problems: if you need to, say, disambiguate between [A B C] and [A D E], then you can use S1 and S2 respectively. There are also additional points to be made about how these signals, when forming a synergistic relationship, are free to explore the functional space without massively perturbing the system; that is, the system is both robust and evolvable.
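The S1/S2 example can also be sketched in a few lines of Python. The specific feature combinations below are hypothetical (the figure’s exact contents aren’t reproduced here), and `speak`/`listen` are crude stand-ins for pragmatic inference that I’m assuming for illustration, not something the argument above specifies: the speaker picks the applicable signal compatible with the fewest meanings in context, and the hearer keeps only the meanings for which that speaker would have produced the signal. This step matters because [A D E] also contains feature A, so S1 on its own is literally compatible with both meanings; the disambiguation works because S2 is available as the more specific alternative. All names (`MEANINGS`, `SIGNALS`, `extension`, `coverage`, `speak`, `listen`) are hypothetical.

```python
# Hypothetical meaning space: each meaning is a set of features A-E.
# These combinations are illustrative; they are not read off the figure.
MEANINGS = [frozenset("ABC"), frozenset("ADE"), frozenset("ABD"), frozenset("CDE")]

# Each signal is keyed on one feature: S1 covers anything with A, S2 anything with D.
SIGNALS = {"S1": "A", "S2": "D"}


def extension(signal, meanings, signals=SIGNALS):
    """Meanings (from the given set) that a signal can refer to."""
    feature = signals[signal]
    return {m for m in meanings if feature in m}


def coverage(signals, meanings):
    """Meanings expressible by at least one of the available signals."""
    covered = set()
    for s in signals:
        covered |= extension(s, meanings, signals)
    return covered


def speak(target, context, signals=SIGNALS):
    """Crude speaker (an assumption): among signals true of the target,
    choose the one compatible with the fewest meanings in context.
    Raises ValueError if no signal applies to the target."""
    options = [s for s in signals if target in extension(s, context, signals)]
    return min(options, key=lambda s: len(extension(s, context, signals)))


def listen(signal, context, signals=SIGNALS):
    """Crude hearer (an assumption): keep only the meanings for which the
    speaker above would actually have chosen this signal."""
    return {m for m in extension(signal, context, signals)
            if speak(m, context, signals) == signal}


if __name__ == "__main__":
    overlap = extension("S1", MEANINGS) & extension("S2", MEANINGS)
    print("overlap:", sorted("".join(sorted(m)) for m in overlap))
    print("covered with S1+S2:", len(coverage(SIGNALS, MEANINGS)))
    print("covered with S2 only:", len(coverage({"S2": "D"}, MEANINGS)))
    context = [frozenset("ABC"), frozenset("ADE")]
    for target in context:
        signal = speak(target, context)
        print("".join(sorted(target)), "->", signal, "->", listen(signal, context))
```

In this toy space, deleting either signal still leaves three of the four meanings expressible, because the overlapping meanings [A D E] and [A B D] are covered by both. In a context containing only [A B C] and [A D E], the sketch’s speaker uses S1 for the former and S2 for the latter, and its hearer recovers each meaning uniquely.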

In summary, there are three important points here: (1) co-evolving degenerate signals are able to carve up their functional space in a more specialised manner by allowing each signal to explore the adjacent functional space without deleterious perturbations to the system; (2) by offloading onto context (i.e. the surrounding signals), an individual signal is able to encode less, as function is distributed across multiple dissimilar structures: that is, degeneracy and functional interdependency increase the compressibility of a signal by entering it into a synergistic dynamic and lowering what needs to be encoded (see also lossy compression algorithms); (3) lastly, degeneracy emerges as a result of pressures stemming from ambiguity: it’s a robust solution that allows for the co-existence of functionally overlapping, context-dependent signals.

In my next post, I’ll flesh these ideas out more clearly, as there’s a lot to grapple with in a single blog entry. As a brief taster: complex socio-cultural dynamics increase the probability of routine ambiguity problems, and degeneracy evolves as a robust solution.

Kirby, S., Cornish, H., & Smith, K. (2008). Cumulative cultural evolution in the laboratory: An experimental approach to the origins of structure in human language Proceedings of the National Academy of Sciences, 105 (31), 10681-10686 DOI: 10.1073/pnas.0707835105

Edelman, G., & Gally, J. (2001). Degeneracy and complexity in biological systems Proceedings of the National Academy of Sciences, 98 (24), 13763-13768 DOI: 10.1073/pnas.231499798

Ay, N., Flack, J., & Krakauer, D. (2007). Robustness and complexity co-constructed in multimodal signalling networks Philosophical Transactions of the Royal Society B: Biological Sciences, 362 (1479), 441-447 DOI: 10.1098/rstb.2006.1971

Altmann, E. G., Pierrehumbert, J. B., & Motter, A. E. (2010). Niche as a determinant of word fate in online groups PLoS ONE, 6 (5), e19009 (2011) arXiv: 1009.3321v2

Whitacre, J. (2010). Degeneracy: a link between evolvability, robustness and complexity in biological systems Theoretical Biology and Medical Modelling, 7 (1) DOI: 10.1186/1742-4682-7-6

Mason, P. (2010). Degeneracy at Multiple Levels of Complexity Biological Theory, 5 (3), 277-288 DOI: 10.1162/BIOT_a_00041
