In January, Ghazanfar, Morrill & Kayser published a paper in PNAS entitled “Monkeys are perceptually tuned to facial expressions that exhibit a theta-like speech rhythm”. The abstract is below:

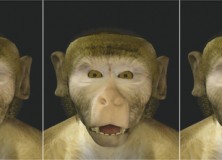

Human speech universally exhibits a 3- to 8-Hz rhythm, corresponding to the rate of syllable production, which is reflected in both the sound envelope and the visual mouth movements. Artificial perturbation of the speech rhythm outside the natural range reduces speech intelligibility, demonstrating a perceptual tuning to this frequency band. One theory posits that the mouth movements at the core of this speech rhythm evolved through modification of ancestral primate facial expressions. Recent evidence shows that one such communicative gesture in macaque monkeys, lip-smacking, has motor parallels with speech in its rhythmicity, its developmental trajectory, and the coordination of vocal tract structures. Whether monkeys also exhibit a perceptual tuning to the natural rhythms of lip-smacking is unknown. To investigate this, we tested rhesus monkeys in a preferential-looking procedure, measuring the time spent looking at each of two side-by-side computer-generated monkey avatars lip-smacking at natural versus sped-up or slowed-down rhythms. Monkeys showed an overall preference for the natural rhythm compared with the perturbed rhythms. This lends behavioral support for the hypothesis that perceptual processes in monkeys are similarly tuned to the natural frequencies of communication signals as they are in humans. Our data provide perceptual evidence for the theory that speech may have evolved from ancestral primate rhythmic facial expressions.

Writing in Nature last week, Tecumseh Fitch published a short news article on Ghazanfar’s findings, including a concise, clear outline of the two main hypotheses for the evolutionary origin of human speech, which he also covers in his 2010 book. The first is the hypothesis that speech is derived from primate vocalizations, since the same vocal production system (lungs, larynx and vocal tract) is used to produce both primate calls and speech. However, as Fitch states, “a problem is that human speech is unique among primate vocalizations in being a very flexible, learned signal, whereas most primate calls have a strong, species-specific genetic determination. The ‘vocal origins’ hypothesis favours evolutionary continuity of vocal production over a hypothetical discontinuity in vocal control and vocal learning.”

The second is MacNeilage’s hypothesis that speech rhythms originated not in the vocal but in the visual domain, since the mouth generates not just vocal but also visual signals. The strength of this hypothesis lies in the fact that the articulators involved in these visual signals are under learned voluntary control in non-human primates. MacNeilage argues that speech develops in babies’ babbling as a lip-smacking behaviour superimposed on a vocal signal. Fitch states: “This rhythmic stream is then differentially modified, by learned tongue and lip movements, into the vowels and consonants of speech. Support for this hypothesis comes from previous work demonstrating that the detailed kinematics of lip-smacking are strikingly similar to those of speech. But Ghazanfar and colleagues’ work adds support from the domain of perception, indicating that perceptual tuning for the two signal classes is also consistent with MacNeilage’s hypothesis.”

As has been covered on this blog before, a lot of research on speech evolution has focused on the descended larynx. This new research adds to the body of work suggesting that anatomy might not be as important as first imagined, and that neural control and vocal learning may matter much more.