This year’s Nijmegen lectures were given by David Poeppel on his work linking language processing to low-level neural mechanisms. He called for more “muscular” linguists to step up and propose a “parts list” of linguistic primitives that neuroscientists could try to detect in the brain. In this post, I cover the generativist answer to this call, as proposed by Norbert Hornstein, who appeared as a panelist at the Nijmegen lectures, and explain why it bothered me (TL;DR: I think there’s more to language science than syntax, and other areas can also draw up a “parts list”).

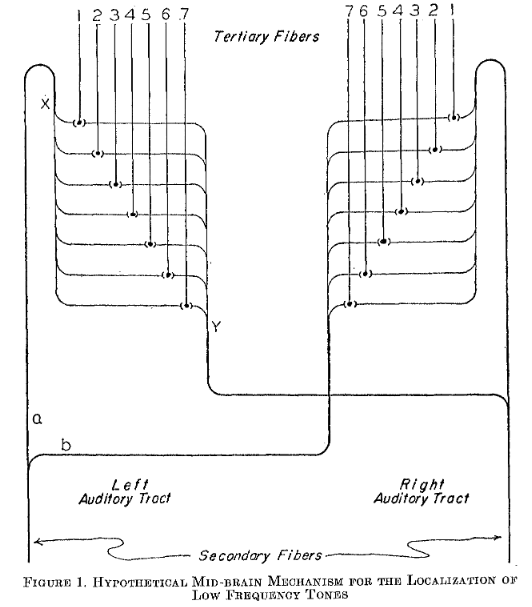

A particularly nice example of a link between theory and implementation in another field comes from the neural mechanisms behind sound localisation in owls. Owls can home in on a sound, like the squeak of a mouse. A computational theory of what this requires (detecting the difference in the arrival time of a sound at the two ears, and then converting that into instructions for moving towards the target) led to a proposal for an algorithm that converted a difference in time into a difference in space. Initially, this algorithm was imagined as an electrical circuit. Amazingly, neuroscientists were able to find this very circuit implemented in neurons in the owl’s brain (see Ashida & Carr, 2011).
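The time-to-space conversion can be sketched with the textbook delay-line model usually attributed to Jeffress (1948). In this toy version (a simplification, not the owl’s actual circuitry), the signals from the two ears travel along delay lines running in opposite directions, and each coincidence detector fires only when both signals reach it at the same moment. Which detector fires therefore encodes the timing difference as a position. The function names `coincidence_responses` and `locate`, the discrete time steps and the number of detectors are all made up for illustration.

```python
# Toy Jeffress-style delay-line model (hypothetical names, discrete time steps).

def coincidence_responses(left, right, n_detectors):
    """Return how often each coincidence detector fires.

    left, right: equal-length lists of 0/1 spikes, one entry per time step.
    Detector i receives the left-ear signal delayed by i steps and the
    right-ear signal delayed by (n_detectors - 1 - i) steps, so the two
    delay lines run in opposite directions along the row of detectors.
    """
    T = len(left)
    responses = []
    for i in range(n_detectors):
        delay_left, delay_right = i, n_detectors - 1 - i
        hits = 0
        for t in range(T + n_detectors):
            l_idx, r_idx = t - delay_left, t - delay_right
            l = left[l_idx] if 0 <= l_idx < T else 0
            r = right[r_idx] if 0 <= r_idx < T else 0
            hits += l * r  # fires only when both delayed inputs arrive together
        responses.append(hits)
    return responses


def locate(left, right, n_detectors=9):
    """Return the index of the most active detector, or None if none fired."""
    responses = coincidence_responses(left, right, n_detectors)
    best = max(range(n_detectors), key=responses.__getitem__)
    return best if responses[best] > 0 else None


# Sound from straight ahead: both ears at the same time -> middle detector.
print(locate([0, 0, 0, 1, 0, 0, 0, 0], [0, 0, 0, 1, 0, 0, 0, 0]))  # 4
# Sound from the left: the left ear hears it 2 steps earlier -> peak shifts.
print(locate([0, 0, 1, 0, 0, 0, 0, 0], [0, 0, 0, 0, 1, 0, 0, 0]))  # 5
```

Because the delay lines run in opposite directions, neighbouring detectors differ by two time steps of interaural difference, so in this toy an odd difference produces no coincidence at all (`locate` returns `None`).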

Poeppel hopes for a similar success story for language. He’s doubtful that we’ll be able to detect simple “areas” responsible for language processing, but he has shown in a series of experiments that there are acoustic regularities across languages, and that brain oscillations track at least two levels of spoken language structure: syllables and higher-level “phrases”. Since syllables are widely held to be universal in human languages, they are a good candidate for the parts list. Poeppel’s most recent work was covered misleadingly in some places as showing that “Chomsky was right” and as evidence for universal grammar in the brain, a claim Poeppel does not make.

Which brings us to Hornstein’s contribution to the lectures. He gave a presentation that amounted to a “guide to linguistics for neuroscientists”. He literally started with a slide saying that “Chomsky is always right”, and went on to provide a “parts list” drawn from generative theory, which he claimed was completely uncontroversial.

The central feature he focused on was the unbounded, recursive merge operation (basically the ability to take two things and combine them into one thing, which can then be combined with another thing, and so on). When he was asked what kind of mechanism in the brain could support merge, he suggested that if neuroscientists could find an implementation of a computational stack (a way of storing information in order, where the last item stored is the first one retrieved), then the rest of the mechanisms of generative theory could be extrapolated from that.
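For readers outside linguistics, here is a minimal sketch of the two ideas in a simplified setting: `merge` combines two objects into a new object that can itself be merged again, and a last-in, first-out stack is used to assemble the structure word by word. The names `merge`, `build`, `depth` and the `"MERGE"` action are made up for illustration; this is a shift-reduce style toy, not Hornstein’s proposal or a standard implementation of generative syntax. Merge results are shown as ordered pairs for readability, whereas the theory usually treats them as unordered sets.

```python
# Toy illustration of merge plus a stack (hypothetical names, not from any library).

def merge(a, b):
    """Combine two syntactic objects into a single new object.
    Shown as an ordered pair; the theory usually uses an unordered set {a, b}."""
    return (a, b)


def depth(obj):
    """Nesting depth: a bare word has depth 0, each merge adds one level."""
    if isinstance(obj, str):
        return 0
    left, right = obj
    return 1 + max(depth(left), depth(right))


def build(actions):
    """Assemble structure with a last-in, first-out stack.
    A word is pushed onto the stack; "MERGE" pops the top two objects
    and pushes the result of merging them."""
    stack = []
    for action in actions:
        if action == "MERGE":
            right = stack.pop()
            left = stack.pop()
            stack.append(merge(left, right))
        else:
            stack.append(action)
    return stack


tree = build(["saw", "the", "dog", "MERGE", "MERGE"])
print(tree)            # [('saw', ('the', 'dog'))]
print(depth(tree[0]))  # 2
```

Nothing in `build` is specific to language: the stack only does generic push-and-pop bookkeeping, and the output of one merge is simply fed into the next.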

This might be true, but it is not very helpful to neuroscientists. By analogy with the owl example, what Poeppel is looking for is a theory of language that makes predictions about what kinds of neural mechanisms support language. Firstly, a stack is a computational-level concept, not an algorithmic one; an algorithmic-level proposal would provide more clues about what to look for. Furthermore, while a computational stack would be very helpful for implementing merge, it would also be helpful for implementing alternative models of grammar (e.g. construction grammar), or face recognition, or playing chess. That is, in contrast to the claim that merge is the unique hallmark of language, a computational stack is extremely general (and, as Poeppel noted, we currently have no viable theory of information storage in the brain). The owl example made specific predictions about neural architectures that were distinct from many other possibilities (and other animals do use other mechanisms).

However, there is one unique feature of generative grammar that does make a specific prediction about a neural mechanism, one that other theories of language do not make: the unbounded nature of merge. I have never really understood the continual emphasis on the ability to create sentences with unboundedly long dependencies. I get that this is a property of the abstract computational theory, but even when addressing neuroscientists, Hornstein kept returning to this feature, which would predict that real brains have some way of processing unboundedly large amounts of data. This, of course, can’t be the case, and Hornstein would not make this claim. But that leaves us with a theory of generative grammar that does not make specific predictions about neural mechanisms, and that, taken literally, suggests looking for something that cannot be there.
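One way to see the tension: a grammar can license arbitrarily deep nesting, but any physical device that tracks nesting has a finite capacity. A minimal sketch, assuming square brackets stand in for constituents and an arbitrary `capacity` parameter stands in for finite memory (the class `BoundedStack` and function `can_process` are hypothetical, and no estimate of real human capacity is implied):

```python
# A stack with a hard capacity limit (hypothetical stand-in for finite memory).

class BoundedStack:
    """A last-in, first-out stack that refuses to grow beyond `capacity` items."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = []

    def push(self, item):
        if len(self.items) >= self.capacity:
            raise OverflowError(f"stack capacity {self.capacity} exceeded")
        self.items.append(item)

    def pop(self):
        return self.items.pop()


def can_process(sentence, capacity):
    """Return True if the bracketing in `sentence` can be tracked with a stack of
    the given capacity. '[' opens a constituent (push), ']' closes it (pop);
    every other character is ignored."""
    stack = BoundedStack(capacity)
    for ch in sentence:
        if ch == "[":
            try:
                stack.push(ch)
            except OverflowError:
                return False  # nesting deeper than the device can hold
        elif ch == "]":
            if not stack.items:
                return False  # unbalanced: closing bracket with nothing open
            stack.pop()
    return not stack.items  # every opened constituent must be closed


nested = "[a [b [c]]]"
print(can_process(nested, capacity=3))  # True
print(can_process(nested, capacity=2))  # False
```

Raising `capacity` lets the device handle deeper nesting, but for any fixed value some depth still fails, which illustrates why “unbounded” cannot be taken literally as a claim about brains.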

This leads to my main problem with Hornstein’s talk, namely that it had a very narrow view of what language is. In line with recent papers from generative linguists, this view equates language with generative syntax, and suggests that nothing else is worth studying, or even amenable to scientific inquiry (see my previous rant). A recent paper by Everaert, Huybregts, Chomsky, Berwick & Bolhuis claims that “Language is neither speech/sign (externalized expression) nor communication (one of its many possible uses)”.

This dogmatic attitude also came across in Hornstein’s talk. To be fair, I agree with what Hornstein has expressed elsewhere: generative linguists have a good notion of the kinds of syntactic patterns that exist in the world’s languages, and these patterns deserve an explanation (and are often ignored by evolutionary linguists outside the generative program). But much of the argument mixed an appeal to authority (experts in grammar think that studying anything else is not worthwhile) with a “no true Scotsman” argument (all linguists agree on this, so if you don’t agree, you’re not a real linguist). Hornstein’s presentation style is quite enjoyable and clearly reflects his character rather than how he would express himself in a formal mode, but I found that I was not engaged by the argument, and did not feel a desire to collaborate or to integrate these ideas into my own work. I found the certainty weirdly unscientific.

To paraphrase an idea from a very different source, imagine that tomorrow a paper is published showing that the concept of merge is correct: it identifies a genetic change in the human genome that regulates brain growth, leading to the formation of neural circuits that implement a cognitive algorithm capable of recursive operations. This would be impressive science, and important news, but – for me at least – it wouldn’t really change anything I do day-to-day in my research. It wouldn’t address any of the questions I have about language, and it wouldn’t be particularly helpful in answering them (at least, no more so than developments in other areas like computation, speech recognition or archaeology).

That’s a long way of saying that the “parts list” for language should have a lot more on it than the bits that support syntax, including all the communicative and social aspects of language. To be clear, I’m not saying that syntax is not an important part of language. I’m just not very interested in it, but I still think I’m studying language.

One topic that I’ve been learning about recently is the rules of conversation as studied through conversation analysis. While this is often a qualitative field, and certainly outside the mainstream of linguistics, it works rigorously from real data to try to capture how conversation works. It’s possible to draw up a “parts list” for conversation, which would include things like taking turns at talking and sequential organisation (certain pragmatic actions are complemented by others, like questions followed by answers). A paper by Stephen Levinson published last month (pdf here) spells out how turn taking has implications for language processing and acquisition, and can be studied through methods from psycholinguistics, cognitive science, neuroimaging, cross-cultural comparison and cross-species comparison (other species take turns!). This wide range of approaches has recently been showcased in a special issue on turn taking. Indeed, one suggestion is that the regularity of turn taking could be supported by brain oscillations in a similar way to Poeppel’s suggestion for syllables. This parts list also has implications for theories of language evolution, which we’ll discuss in our workshop Language adapts to Interaction.

I’ve also been thinking about what “parts” could be added to the list from the field of cultural evolution. There are plenty of ideas for concepts that are essential to the transmission and maintenance of culture (e.g. Replicator/Interactor/Selection), but not all make predictions about neural mechanisms. One approach that might help here is to ask why humans use language. The role of language in maintaining social relationships and links with social cognition may provide clues for how the fields of cultural evolution and neuroscience can talk to each other. Then there are other aspects connected to acquisition and teaching, such as gaze following and pointing.

Anyway, here’s my message for neuroscientists: There’s more to language than syntax.

“a computational stack would be very helpful for implementing merge, but it would also be helpful for implementing alternative models of grammar (e.g. construction grammar) or face recognition or playing chess”.

I didn’t attend the lecture, but from your description it didn’t sound like Hornstein thought that finding a neural circuit in the brain that implemented a stack would be evidence for mainstream generative grammar over HPSG or construction grammar or whatever. If I understand correctly, Hornstein’s recommendation for neuroscientists was to try and find a neural circuit that implements “recursion”; all of those frameworks would presumably need to have some kind of way to implement that.

I’m not sure stacks would be that useful in face recognition. Chess is a bit of a weird example in this context, but sure, if we find good evidence that stacks are essential to human chess playing, it seems reasonable to try to correlate the depth of the stack at a given moment with neural activity.
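The quantity suggested here, the depth of a stack at a given moment, can be computed word by word. A minimal sketch, assuming the sentence comes with a hand-supplied bracketing (the one below is hypothetical, not a linguistic analysis, and `word_depths` is a made-up name): the number of currently open constituents is recorded at each word, giving one value per word that could, in principle, be compared with a neural measure time-locked to that word.

```python
# Per-word stack depth for a bracketed token sequence (hypothetical illustration).

def word_depths(tokens):
    """Return (word, depth) pairs, where depth is the number of constituents
    still open (i.e. the stack size) when the word is read."""
    open_constituents = []  # stack of currently open brackets
    result = []
    for tok in tokens:
        if tok == "[":
            open_constituents.append(tok)
        elif tok == "]":
            open_constituents.pop()
        else:
            result.append((tok, len(open_constituents)))
    return result


tokens = "[ the dog [ that [ the cat ] chased ] barked ]".split()
print(word_depths(tokens))
# [('the', 1), ('dog', 1), ('that', 2), ('the', 3), ('cat', 3), ('chased', 2), ('barked', 1)]
```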