As Niyogi & Berwick (2009) point out, there is a tendency in the modelling of Linguistic Evolution to assume chains of single learners, each inheriting a single grammar from a single teacher. This is, of course, not realistic: we learn language from many people, and people can speak more than one language. However, Niyogi & Berwick raise deeper objections.

First, this type of model (which they call an “Iterated Learning” (IL) model) allows only linear dynamics and a single stable equilibrium. This runs against much of the empirical evidence from linguistic communities. Second, IL models cannot maintain homogeneous populations (although see Smith, 2009; more on this below). Perhaps most worryingly, however, they argue that IL models do not fully embrace Darwinian Evolution. This is because two sources of variation are central to Darwinian populational thinking: variation at the parental level and variation at the offspring level.

In contrast, Niyogi & Berwick suggest that Social Learning (SL) models are more productive. These allow multiple learners to learn from multiple teachers, and they have non-linear dynamics and possibly multiple equilibria. This allows bifurcations in the population, which is compatible with much of the empirical data. Furthermore, different learning algorithms may lead to different evolutionary consequences, making hypotheses about acquisition testable against empirical data.
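To make the contrast between linear and non-linear dynamics concrete, here is a toy sketch. It is not Niyogi & Berwick's actual model: it assumes just two grammars (G1 and G2), an IL learner that copies one teacher but mislearns with a hypothetical error rate `eps`, and an SL learner that hears `k` utterances from the whole population and adopts the majority grammar. The function names are illustrative.

```python
from math import comb

def il_step(x, eps=0.1):
    """Iterated Learning: each learner copies a single teacher.

    x is the probability that the teacher speaks grammar G1; the learner
    acquires the teacher's grammar but mislearns it with probability eps.
    The update is linear in x.
    """
    return x * (1 - eps) + (1 - x) * eps

def sl_step(x, k=5):
    """Social Learning: each learner hears k utterances (k odd) from a
    population in which a fraction x speaks G1, and adopts the majority
    grammar in its sample. The update is a non-linear polynomial in x.
    """
    return sum(comb(k, j) * x**j * (1 - x) ** (k - j)
               for j in range(k // 2 + 1, k + 1))

def iterate(step, x0, generations=50):
    """Apply a population update repeatedly and return the final share of G1."""
    x = x0
    for _ in range(generations):
        x = step(x)
    return x

for x0 in (0.4, 0.6):
    print(f"start {x0}: IL -> {iterate(il_step, x0):.3f}, SL -> {iterate(sl_step, x0):.3f}")
```

Starting from 0.4 or 0.6, the IL chain ends at 0.500 both times (its single equilibrium), whereas the SL map ends at 0.000 and 1.000 respectively: it has two stable equilibria separated by an unstable one at 0.5, so where the population ends up depends on where it starts.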

So, should we throw away Iterated Learning models in favour of population models? Not so fast. The developers of the Iterated Learning framework (e.g. Kirby, 2000; Kirby, Dowman & Griffiths, 2007) would argue that they are not trying to model language change, but developing neutral models of language Evolution. That is, while Social Learning models are clearly more suitable for studying historical changes such as the loss of verb-second (V2) word order in English, they don’t explain how the categories of verbs and nouns came about in the first place. Furthermore, without the simpler IL models as a baseline, it is not clear what the extra dynamics are actually contributing.

A second reason that IL models are not old hat comes from research on inductive biases. Griffiths, Kalish & Lewandowsky (2008) have shown that using iterated learning in laboratory experiments can reveal inductive biases that would otherwise be obscured.

Having said this, it’s probable that language evolved in social groups of moderate size, and it’s clearly observable that bilingualism is widespread and that child learners are fundamentally capable of it. We would therefore expect the presence of multiple speakers and multiple languages to affect the adaptive pressures on language evolution.

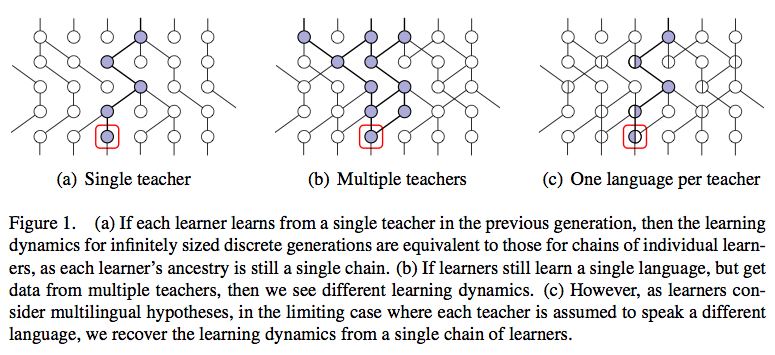

Burkett & Griffiths (2010) go a long way towards applying populational thinking to language evolution. They describe a Bayesian model of language acquisition that takes into consideration multiple teachers and multiple languages. They point out that a learner who tries to settle on a single grammar that fits data from multiple speakers violates the principle of Bayesian rational analysis. Burkett & Griffiths rectify this problem by defining a model in which a learner takes into account that the data it receives may be generated by different speakers who may speak more than one language.

Doing this involves a lot of complications. Here’s a list of things I had to look up before coming close to understanding the paper:

- Bernoulli distributions

- Beta distributions

- Hyperparameters

- Wright-Fisher model of genetic drift

- Kronecker’s delta function

- Dirichlet process

- Gibbs Sampler

- Chinese Restaurant Process
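Of these, the Chinese Restaurant Process (CRP) is the piece that controls how many languages a learner expects. The sketch below is the standard CRP, with speakers as "customers" and languages as "tables"; the concentration parameter `alpha` and the function names are illustrative, and this assumes (rather than reproduces) the way the paper parameterises its prior. A small `alpha` puts nearly everyone at one table (one language for the whole population); a large `alpha` gives nearly every speaker a table of their own.

```python
import random

def crp_assignments(n_speakers, alpha, rng):
    """Seat speakers one at a time; return the occupancy of each table (language).

    An existing table is chosen with probability proportional to its occupancy,
    and a new table with probability proportional to alpha.
    """
    tables = []
    for _ in range(n_speakers):
        weights = tables + [alpha]          # last entry stands for "open a new table"
        k = rng.choices(range(len(weights)), weights=weights)[0]
        if k == len(tables):
            tables.append(1)                # this speaker introduces a new language
        else:
            tables[k] += 1                  # this speaker shares an existing language
    return tables

def expected_tables(n_speakers, alpha):
    """Expected number of distinct languages: sum of alpha / (alpha + i)."""
    return sum(alpha / (alpha + i) for i in range(n_speakers))

rng = random.Random(42)
for alpha in (0.01, 1.0, 100.0):
    print(alpha, crp_assignments(10, alpha, rng), round(expected_tables(10, alpha), 2))
```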

Yes, probably dirt basic for mathematicians, but terrifying to us mere linguists. This took me about a month to get to grips with, and I’m still not really confident. Basically, the data that the agents produce is made up of words, each of which is produced by a language with a certain probability. An agent’s hypothesis is then a distribution over languages. Paraphrasing Burkett & Griffiths’ example, an agent who speaks two languages A and B could have a hypothesis h where p(A|h) = 0.6 and p(B|h) = 0.4 (it is more likely to speak A). When it comes to producing data, a language is selected probabilistically given the agent’s hypothesis. Then, words are produced given the selected language. For instance, in a system with two words “ay” and “bee”, the probability of A producing “ay” is very high, but the probability of A producing “bee” is very low. The opposite is true for language B.
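The production process described above can be sketched in a few lines. This is an illustration under stated assumptions, not the paper's code: "very high" and "very low" are set to the hypothetical values 0.9 and 0.1, a fresh language is drawn for every word, and `LANGUAGES`, `produce` and `p_word` are made-up names.

```python
import random

# Hypothetical word probabilities: the example only says "very high" and "very low".
LANGUAGES = {
    "A": {"ay": 0.9, "bee": 0.1},
    "B": {"ay": 0.1, "bee": 0.9},
}

def produce(hypothesis, n_words, rng):
    """Generate words: for each word, pick a language from the hypothesis
    (a distribution over languages), then pick a word from that language."""
    words = []
    for _ in range(n_words):
        lang = rng.choices(list(hypothesis), weights=list(hypothesis.values()))[0]
        vocab = LANGUAGES[lang]
        words.append(rng.choices(list(vocab), weights=list(vocab.values()))[0])
    return words

def p_word(word, hypothesis):
    """Marginal probability of a word: sum over languages of p(lang|h) * p(word|lang)."""
    return sum(p * LANGUAGES[lang][word] for lang, p in hypothesis.items())

h = {"A": 0.6, "B": 0.4}                  # the bilingual agent from the example
sample = produce(h, 10_000, random.Random(0))
print(p_word("ay", h))                    # 0.6 * 0.9 + 0.4 * 0.1, i.e. about 0.58
print(sample.count("ay") / len(sample))   # empirical share of "ay", close to 0.58
```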

So, a learner hears a lot of “ay”s and “bee”s and, using Bayesian inversion (Bayes’ rule), calculates the most likely distribution over languages. The prior bias in the model has two parts. One defines which languages are preferred, as in many other models. The other affects the agent’s expectation about the number of languages in a population: it can be biased to expect anything from a single language shared by the entire population to a different language for each teacher. Burkett & Griffiths point out that as an agent approaches the latter assumption, the inheritance dynamics change from a complex net to a simpler chain.
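The Bayesian inversion step can be illustrated for the simplest case: one speaker whose hypothesis is p(A|h) = theta, with the learner computing a posterior over theta on a grid. This is only a sketch of Bayes' rule under assumed word probabilities (0.9 and 0.1) and a flat default prior; it leaves out the part of Burkett & Griffiths' model that infers which speaker and language produced each word, which is where the Dirichlet process and the Gibbs sampler come in. All names are illustrative.

```python
import math

# Hypothetical word probabilities for the "ay"/"bee" toy.
P_AY = {"A": 0.9, "B": 0.1}

def log_likelihood(theta, n_ay, n_bee):
    """Log-probability of the word counts if the speaker uses A with probability theta."""
    p_ay = theta * P_AY["A"] + (1 - theta) * P_AY["B"]
    return n_ay * math.log(p_ay) + n_bee * math.log(1 - p_ay)

def posterior_over_theta(n_ay, n_bee, log_prior=lambda theta: 0.0, steps=100):
    """Bayes' rule on a grid: posterior(theta) is proportional to
    prior(theta) * likelihood(data | theta). The default prior is flat."""
    grid = [i / steps for i in range(steps + 1)]
    log_post = [log_prior(t) + log_likelihood(t, n_ay, n_bee) for t in grid]
    top = max(log_post)                        # subtract the max to avoid underflow
    weights = [math.exp(v - top) for v in log_post]
    total = sum(weights)
    return grid, [w / total for w in weights]

grid, post = posterior_over_theta(n_ay=58, n_bee=42)
map_theta = grid[post.index(max(post))]
print(map_theta)   # 0.6: the grid value at which p("ay") = 0.58
```

Passing a non-flat `log_prior` is where a preference for particular languages would enter.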

This is a really neat trick.

This causes the prior to affect the evolutionary dynamics. When an agent expects many languages, the distribution of languages in the population comes to reflect the prior distribution (bilingualism can be maintained). However, if agents assume that all the speakers in a population speak the same language, as in a monolingual society, then a single language tends to dominate, depending on prior biases and initial data conditions (as in Smith, 2009). This links back to Niyogi & Berwick’s point about population models allowing different evolutionary dynamics.
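Both outcomes show up even in a deliberately crude toy, which is a simplification rather than Burkett & Griffiths' model: agents are monolingual, there is no Dirichlet process, and the two extremes of the expectation about the number of languages are hard-coded as a flag (the hypothetical `assume_one_language`). Learners sample a language from their posterior. All parameter values (word probabilities, a 0.7 prior on A, 5 teachers, 3 words per teacher) are invented for illustration.

```python
import math
import random

# Hypothetical toy parameters (not Burkett & Griffiths' settings).
P_AY = {"A": 0.9, "B": 0.1}    # p("ay" | language)
PRIOR = {"A": 0.7, "B": 0.3}   # prior bias in favour of language A

def speak(lang, n, rng):
    """n words from a monolingual speaker of lang."""
    return ["ay" if rng.random() < P_AY[lang] else "bee" for _ in range(n)]

def sample_language(words, rng):
    """Sample a language from the posterior, treating all words as coming from one language."""
    n_ay = words.count("ay")
    n_bee = len(words) - n_ay
    log_post = {lang: math.log(PRIOR[lang]) + n_ay * math.log(P_AY[lang])
                + n_bee * math.log(1 - P_AY[lang]) for lang in PRIOR}
    top = max(log_post.values())
    w_a, w_b = (math.exp(log_post[lang] - top) for lang in ("A", "B"))
    return "A" if rng.random() < w_a / (w_a + w_b) else "B"

def next_generation(pop, assume_one_language, rng, m=5, n=3):
    """Each learner hears n words from each of m randomly chosen teachers."""
    new_pop = []
    for _ in pop:
        teachers = rng.choices(pop, k=m)
        if assume_one_language:
            # Expect one shared language: pool all teachers' data into one inference.
            data = [w for t in teachers for w in speak(t, n, rng)]
        else:
            # Expect every teacher to differ: other teachers' data says nothing about
            # this one, so (as a simplification) learn from one teacher, giving chains.
            data = speak(teachers[0], n, rng)
        new_pop.append(sample_language(data, rng))
    return new_pop

def simulate(assume_one_language, size=300, generations=200, seed=1):
    """Return the share of language-A speakers in each generation, starting at 50/50."""
    rng = random.Random(seed)
    pop = ["A"] * (size // 2) + ["B"] * (size - size // 2)
    shares = []
    for _ in range(generations):
        pop = next_generation(pop, assume_one_language, rng)
        shares.append(pop.count("A") / size)
    return shares

one = simulate(assume_one_language=True)
many = simulate(assume_one_language=False)
print("expect one language:   final share of A =", round(one[-1], 3))
print("expect many languages: mean share of A over last 100 generations =",
      round(sum(many[-100:]) / 100, 3))
```

With pooled data, the majority language snowballs until one language holds almost the whole population; with teacher-by-teacher learning, the population hovers around the prior's 70/30 split, so both languages persist.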

Relating this to the real world, this might explain some phenomena associated with bilingualism and linguistic variation. Why are some societies stably monolingual while others are stably bilingual? Possibly because learners within those societies adjust their expectations to fit their society, and this feeds back into the population’s language distribution. It may also help explain differences in the more domain-general learning strategies employed by monolinguals and bilinguals.

Recently, Razib Khan has blogged about Linguistic Diversity and Poverty, a topic also discussed previously on this blog. Razib’s theory is that “over the generations there will be a shift toward a dominant language if there is economic, social and cultural integration”. Another possible explanation for why industrialised countries are less bilingual is that communication technology allows more people to communicate with each other. This makes the social network less sparse, which may make it more rational to assume that everybody speaks the same language. This, in turn, encourages a single dominant language through the process described above.

Next, I’ll describe a simpler model of bilingualism where agents can take into account who is saying what.

Burkett, D., & Griffiths, T. (2010). Iterated learning of multiple languages from multiple teachers. The Evolution of Language: Proceedings of EvoLang 2010.

Niyogi, P., & Berwick, R. C. (2009). The proper treatment of language acquisition and change in a population setting. Proceedings of the National Academy of Sciences of the United States of America, 106(25), 10124-10129. PMID: 19497883

A note on stability: in this model, societies can jump to a different equilibrium state if, for example, there is a sudden change in the population, such as a reduction or increase in the number of agents, or a change in the network structure. Real-world analogues include migration, invasion, and the development of communications or travel technology.