The way children learn language sets the adaptive landscape on which languages evolve. This is widely acknowledged, but there are few connections between models of language acquisition and models of language evolution (exceptions include Yang (2002), Yu & Smith (2007) and Chater & Christiansen (2010)).

However, the chasm between the two fields may be narrowing, as theories of acquisition are increasingly defined as models that are both more interpretable to technically minded Language Evolutionists and extendible to populations and generations.

Strangely, models of word learning have also been getting simpler over time. This may reflect a move away from attributing language acquisition to specific mechanisms and towards more general cognitive explanations. I review some older models here, along with a recent publication by Fazly et al. (2010).

Word Learning as Logical Inference

Siskind’s (1996) model is based on set logic and focusses on the logical inferences one can make about the relations between words and meanings. The model receives sets of unordered words and meanings and tries to find connections between words and meanings which consistently co-occur. This is an example of cross-situational learning, and it is the basic approach of all the following models. Siskind’s model is a complicated algorithm which sets up some basic definitions, but then needs to build in many error-checking processes to cope with noise and redundancy. In the end, it never took off as an explanatory model because it was too closely tailored to the phenomenon.
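
The core cross-situational idea can be sketched in a few lines. This is a minimal sketch, not Siskind’s actual algorithm (which adds the error-checking machinery mentioned above): each word simply keeps the set of meanings that were present every time it was heard. The function name and the toy data are hypothetical.

```python
def cross_situational_intersection(situations):
    """Map each word to the meanings present on every occasion it was heard.

    situations: iterable of (words, meanings) pairs, each a collection of strings.
    Returns a dict: word -> set of remaining candidate meanings.
    """
    candidates = {}
    for words, meanings in situations:
        meanings = set(meanings)
        for word in set(words):
            if word in candidates:
                # Keep only meanings that co-occurred on every exposure so far.
                candidates[word] &= meanings
            else:
                # First exposure: every meaning in view is still possible.
                candidates[word] = set(meanings)
    return candidates


situations = [
    ({"look", "duck"}, {"DUCK", "POND"}),
    ({"a", "duck"}, {"DUCK", "COW"}),
    ({"look", "cow"}, {"COW", "DUCK"}),
]
lexicon = cross_situational_intersection(situations)
print(lexicon["duck"], lexicon["look"])
```

Even in this tiny example ‘look’ ends up pinned to DUCK, and a single noisy situation in which a word’s meaning is absent would empty its candidate set entirely, which is why pure set logic needs so much extra machinery.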

Word Learning as Machine Learning

Yu, Ballard and Aslin (2005) use machine learning techniques (neural networks, Expectation-Maximisation, dynamic programming, clustering) and focus on how concepts are formed from real-world data. The model is incredibly complicated. It uses real data recorded from narrators reading a picture-book story. Eye-tracking data is used to estimate the caregiver’s focus of attention; this is supposed to model the kind of non-speech information a child could use to make inferences about intentions. Real-time video from a head-mounted camera is fed to a feature-clustering algorithm which segments the scene into objects, a process that also uses the intentional information. Audio of the caregiver’s speech is automatically segmented into phonemes, then words (taking mispronunciations and phonological changes into account). Finally, the word-learning algorithm associates words and objects. The approach is adapted from machine translation, where the same text in two languages must be aligned. In this case, a speech stream must be aligned with a ‘meaning language’ of conceptual symbols representing visual objects.
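
To give a flavour of the translation-style alignment step, here is a generic batch Expectation-Maximisation sketch in the style of IBM Model 1 (Brown et al., 1993), treating the objects in view as the ‘meaning language’. It is an illustration of the technique only, not Yu et al.’s implementation: there is no speech segmentation, visual clustering or attention weighting, and the function names and toy corpus are hypothetical.

```python
from collections import defaultdict


def em_align(corpus, n_iter=20):
    """Batch EM estimate of t[(word, obj)] = P(word | obj), IBM Model 1 style.

    corpus: list of (words, objects) pairs. Every iteration revisits the whole
    corpus, so all utterances must be held in memory at once.
    """
    vocab = {w for words, _ in corpus for w in words}
    # Uniform starting probabilities for every (word, object) pair.
    t = defaultdict(lambda: 1.0 / len(vocab))
    for _ in range(n_iter):
        counts = defaultdict(float)  # expected (word, object) alignment counts
        totals = defaultdict(float)  # expected counts per object
        for words, objects in corpus:
            for w in words:
                # E-step: share each word among the objects in view.
                norm = sum(t[(w, o)] for o in objects)
                for o in objects:
                    frac = t[(w, o)] / norm
                    counts[(w, o)] += frac
                    totals[o] += frac
        # M-step: renormalise expected counts into P(word | object).
        t = defaultdict(float, {(w, o): c / totals[o] for (w, o), c in counts.items()})
    return t


def best_word(t, obj):
    """The word with the highest estimated P(word | obj)."""
    return max((w for (w, o) in t if o == obj), key=lambda w: t[(w, obj)])


corpus = [
    (["look", "duck"], ["DUCK", "POND"]),
    (["duck", "swims"], ["DUCK"]),
    (["look", "cow"], ["COW", "POND"]),
    (["cow", "eats"], ["COW"]),
]
t = em_align(corpus)
print(best_word(t, "DUCK"), best_word(t, "COW"))
```

Because every iteration sweeps the whole corpus, a learner of this kind needs all the utterances at once, with no notion of their order in time.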

In many ways, the model is the opposite of Siskind’s. Siskind focussed on the mapping problem using a large corpus of artificial data, whereas Yu et al. focus on the intentional problem using a small corpus of real data. The model is extremely impressive in that it goes from realistic raw data to a mapping between words and segmented images. There are some problems, however.

First, the phonemic segmentation is initially trained on a hand-annotated phonetic corpus (TIMIT). Second, the model has access to all the utterances at once: there is no memory limit, nor any sense that the data are ordered in time. This makes segmentation and inference much easier than if categorisation had to be done on the fly.

Another problem is the stimuli. The story used to create the data, ‘I Went Walking’, is a repetitive rhyme about a boy who meets many different animals, all of whom end up following him. The structure of the utterances is predictable, and the animals are presented one by one and cumulatively (first a duck, then a duck and a cow, then a duck, a cow and a pig, etc.). In other words, the story is practically designed for teaching vocabulary through cross-situational learning. Most of the input children receive will not be this ideal.

Word Learning as Bayesian Inference

Frank et al. (2009) present a Bayesian model which focusses on the biases of learners trying to acquire a speaker’s mappings between words and meanings. It recognises that word learning involves two tasks: inferring the speaker’s intended meaning and learning the mappings between words and referents. Furthermore, once the intended meanings are understood, mapping the words is easy.

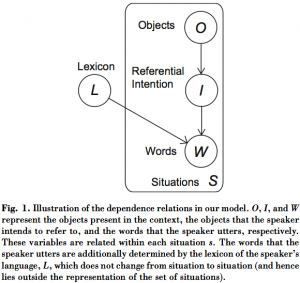

The model finds the most likely lexicon given the utterances and their physical contexts. Like Siskind’s model, it uses assumptions about the speaker to restrict the set of possible meanings. A speaker’s intentions are assumed to be a direct function of the objects in the physical environment, and the words a speaker utters are assumed to be a function of their intentions and their lexicon.
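
Written out, this structure can be summarised roughly as follows. This is my sketch of the factorisation in Frank et al. (2009), with L the lexicon, C the corpus of situations s, and each situation having objects O_s, intended referents I_s (a subset of O_s) and uttered words W_s; P(L) is a prior over lexicons.

$$
P(L \mid C) \propto P(C \mid L)\,P(L), \qquad
P(C \mid L) = \prod_{s \in C} \; \sum_{I_s \subseteq O_s} P(W_s \mid I_s, L)\, P(I_s \mid O_s)
$$

The sum over possible intentions is where the speaker model does its work: words depend on the objects present only through the intended referents.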

This makes the words uttered conditionally independent of the objects present, given the speaker’s intentions, so words can be used referentially or non-referentially (e.g. function words) and can refer to things outside the immediate physical context. The code for the model is available online and includes implementations of some other cross-situational learning models. I’ve tried to extend it to cope with bilingual input; a brief report can be found here.

The model exhibits Mutual Exclusivity behaviour (a preference for mapping a novel word onto an object that does not already have a name). This is interesting, but it’s unclear how much the model’s definition contributes to it.

Word Learning as Parameter Setting

Yang (2002) takes a ‘variational’ approach to language acquisition. Although it’s a model of language learning rather than word learning per se, it fits into my ‘simplifying’ story. The initial approach is very similar to Bayesian approaches: learners try to infer a target grammar given utterances. Each potential grammar has an associated weight, and the grammars with the strongest weights represent the best current hypotheses. Given an input sentence, a grammar is chosen with a probability proportional to its weight and is applied to the sentence. If it succeeds in analysing the sentence (the sentence is grammatical under it), it is rewarded by increasing its weight. If it fails, it is punished by decreasing its weight. Over time, the distribution over hypotheses converges towards the hypothesis that is generating the input sentences. The best hypothesis emerges through a kind of Natural Selection.
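
A minimal sketch of the grammar-level learner, assuming the linear reward-penalty update that (as I understand it) Yang’s variational model borrows from mathematical learning theory: a successful grammar’s weight moves towards 1 by a fraction γ of the remaining distance, and a failing grammar’s weight shrinks by a factor of (1 − γ), with the freed mass spread over the others. The toy ‘grammars’ below are hypothetical word-order checkers, and γ = 0.05 is an arbitrary illustrative value.

```python
import random


def lrp_update(weights, chosen, success, gamma=0.05):
    """Linear reward-penalty update; `weights` sum to 1 before and after."""
    n = len(weights)
    new = []
    for j, p in enumerate(weights):
        if success:
            # Reward: the chosen grammar gains, all others shrink proportionally.
            new.append(p + gamma * (1 - p) if j == chosen else (1 - gamma) * p)
        else:
            # Punish: the chosen grammar shrinks, its lost mass is shared by the rest.
            new.append((1 - gamma) * p if j == chosen else gamma / (n - 1) + (1 - gamma) * p)
    return new


def variational_learner(grammars, sentences, gamma=0.05, seed=0):
    """Each grammar is a predicate: grammar(sentence) -> True if it can analyse it."""
    rng = random.Random(seed)
    weights = [1.0 / len(grammars)] * len(grammars)
    for sentence in sentences:
        # Choose a grammar with probability equal to its current weight.
        i = rng.choices(range(len(grammars)), weights=weights)[0]
        weights = lrp_update(weights, i, grammars[i](sentence), gamma)
    return weights


def word_order(order):
    """Hypothetical toy grammar that accepts only sentences in one word order."""
    return lambda sentence: sentence == order


orders = [("S", "V", "O"), ("S", "O", "V"), ("V", "S", "O")]
grammars = [word_order(o) for o in orders]
weights = variational_learner(grammars, [("S", "V", "O")] * 2000)
print([round(w, 3) for w in weights])
```

Note that the wrong grammars help too: every time one of them is chosen and fails, part of its weight is handed to the others, including the target.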

However, unlike some Bayesian approaches, there are no prior biases: the weights only reflect the statistical properties of the input. Furthermore, the model is designed to handle multiple hypotheses (bilingualism) and optionality within languages (e.g. more than one way to order verb, subject and object). Yang also recognises that keeping track of weights for many hypotheses is unrealistic: a hypothesis space with 30 binary parameters has 2^30 (over a billion) hypotheses, each with a weight. Instead, the model constructs the most probable grammar as it learns. To do this, a weight is given to each parameter (e.g. Wh-movement or V2), so in a hypothesis space with 30 parameters only 30 weights need to be stored. For every input sentence, a grammar is constructed by setting each parameter with a probability given by its weight. If a parameter has a high weight, it is likely to be set in a particular way, and if the resulting grammar succeeds in analysing the sentence, the weight goes up, making it even more likely to be set in the same way again.

It’s interesting to note that this is much more like a neural network solution. Parameters are input nodes which activate output nodes with a probability proportional to the weight of that parameter. The output is compared to the input string and the weights are updated. In fact, Yang highlights the problem of how to update the weights, and back-propagation (Bryson & Ho, 1969) is one possible solution. Yang suggests a simpler alternative: change the weights of all parameters involved in an utterance equally (either punish or reward them all). Yang shows that, for certain parameters, the model still converges on the correct grammar in the long run.
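
The parameter-level version can be sketched the same way. This is a minimal sketch under two assumptions of mine: each toy input sentence is represented as a dict of the parameter values it requires (a hypothetical encoding), and only the parameters involved in a sentence are updated, all rewarded or all punished together with the same linear reward-penalty step. γ = 0.02 is illustrative.

```python
import random


def naive_parameter_learning(sentences, n_params, gamma=0.02, seed=0):
    """One weight per binary parameter: weights[k] = P(parameter k is set to 1).

    Each sentence is a hypothetical dict {parameter index: value it requires}.
    """
    rng = random.Random(seed)
    weights = [0.5] * n_params
    for sentence in sentences:
        # Build a grammar by setting each parameter with probability given by its weight.
        setting = [1 if rng.random() < w else 0 for w in weights]
        success = all(setting[k] == v for k, v in sentence.items())
        for k in sentence:  # only the parameters involved in this sentence
            # Probability currently assigned to the value that was just used.
            p = weights[k] if setting[k] == 1 else 1 - weights[k]
            # Reward or punish every involved parameter value equally.
            p = p + gamma * (1 - p) if success else (1 - gamma) * p
            weights[k] = p if setting[k] == 1 else 1 - p
    return weights


target = (1, 0, 1)  # hypothetical target setting of three binary parameters
sentences = [{k: target[k]} for _ in range(200) for k in range(3)]
weights = naive_parameter_learning(sentences, n_params=3)
print([round(w, 3) for w in weights])
```

With single-parameter sentences like these, the parameter involved moves towards its target value on every update. When a sentence involves several parameters, a correctly set parameter can be punished for a neighbour’s mistake, which is one reason convergence is only guaranteed under certain conditions.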

Although connectionism seems to have fallen out of favour in more computational circles, it may be reborn here by harnessing the power of Selection and Evolution to solve problems. The model is also more transparent than most simple recurrent networks, since the nodes are independent of one another.

Word Learning as Machine Translation

Most recently, Fazly et al. (2010) argue that all the approaches above are too computationally expensive to be psycholinguistically realistic (a feeling I can sympathise with, having tried to extend Frank et al.’s model). First, many models involve keeping track of a very large number of probability distributions. Second, many models do not process the data incrementally, but have access to all the input at once. Fazly et al. seek to rectify this by defining an incremental, probabilistic model of word-meaning associations. The model is based on general cognitive abilities and, unlike Frank et al.’s model, has no domain-specific biases or constraints (there is an upper bound on the expected number of meaning types, but it is included only for tractability and can be set arbitrarily large without affecting the learning dynamics).

Fazly et al. hypothesise that much about word-meaning associations can be inferred directly from child-directed utterances and the situations in which they are spoken. The model does assume that utterances can refer to meanings, and it presupposes basic word segmentation and attentional mechanisms. This seems reasonable, given Yu et al.’s success at implementing such mechanisms.

The model conceptualises the problem as mapping a set of words to a set of meanings, much like mapping the words of a sentence in one language to their translation equivalents in another. In fact, the model is based on a machine translation algorithm (Brown et al., 1993). Essentially, it keeps track of how often a given word and meaning co-occur. However, each co-occurrence is weighted by the model’s current estimate of the association: the association between a co-occurring word and meaning increases in proportion to the model’s confidence that they belong together. Over many utterance-scene pairs, word-meaning pairs which consistently co-occur (and are consistently absent together) gain high association probabilities, while pairs which do not co-occur gain low ones. After processing an utterance, the model no longer has access to it.

The model can handle noise and ambiguity. This is achieved by including an invisible ‘dummy’ word that appears in every utterance – a sort of sublime presence (a bit spooky, really).
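
A minimal sketch of the incremental update, as I read it from Fazly et al. (2010): for each meaning in the scene, one unit of credit is split among the words of the utterance (including the dummy word) in proportion to the current estimates p(m | w); that credit is added to running association scores, from which smoothed probabilities are recomputed. The class name, the dummy token and the values of β and λ are illustrative assumptions, not the authors’ code.

```python
from collections import defaultdict

DUMMY = "<dummy>"  # hypothetical token for the invisible word added to every utterance


class IncrementalWordLearner:
    """Incremental cross-situational learner loosely following Fazly et al. (2010)."""

    def __init__(self, beta=100, lam=1e-5):
        self.beta = beta  # assumed upper bound on the number of meaning types
        self.lam = lam    # small smoothing constant
        self.assoc = defaultdict(lambda: defaultdict(float))  # assoc[word][meaning]

    def prob(self, meaning, word):
        """Smoothed p(meaning | word); equals 1/beta for a word never seen before."""
        row = self.assoc[word]
        return (row[meaning] + self.lam) / (sum(row.values()) + self.beta * self.lam)

    def observe(self, words, meanings):
        """Process one utterance and its scene; the utterance itself is not stored."""
        words = list(words) + [DUMMY]
        # Alignments use the estimates from before this utterance was seen.
        old = {(w, m): self.prob(m, w) for w in words for m in meanings}
        for m in meanings:
            total = sum(old[(w, m)] for w in words)
            for w in words:
                # Credit for meaning m is split among words by their current p(m | w).
                self.assoc[w][m] += old[(w, m)] / total


stream = [
    (["look", "duck"], ["DUCK", "POND"]),
    (["a", "duck"], ["DUCK", "COW"]),
    (["the", "duck"], ["DUCK", "FENCE"]),
    (["a", "cow"], ["COW"]),
]
learner = IncrementalWordLearner()
for words, meanings in stream * 10:  # the stream is seen one utterance at a time
    learner.observe(words, meanings)
print(round(learner.prob("DUCK", "duck"), 2), round(learner.prob("POND", "look"), 2))
```

Each call to `observe` touches only the current utterance, so memory grows with the number of word-meaning pairs encountered rather than with the number of utterances heard.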

The model exhibits some word-learning phenomena: Frequent words are learned faster than infrequent ones; the rate of acquisition follows an S-curve, slow to begin, then rapid and finally slowing down again; and words presented later in the process require fewer repetitions to resolve (fast-mapping). Fazly et al. point out that this model exhibits Mutual Exclusivity behaviour. However, in their model, this behaviour is a consequence of the general problem-solving approach, rather than a driving bias (as in Frank et al.).

It would be interesting to think about how much this process resembles Natural Selection, and how it would fit into an Evolving population. The algorithm may also be simple enough for us to imagine how it Evolved: its requirements are increased memory capacity and some simple processing, rather than domain-specific constraints.

The work above represents a gradual reaching-out by the Language Acquisition community towards the techniques and models of Language Evolution folks. Let’s hope there’s a prosperous era of co-operation ahead of us!

Chater, N., & Christiansen, M. H. (2010). Language acquisition meets language evolution. Cognitive Science.

Fazly, A., Alishahi, A., & Stevenson, S. (2010). A probabilistic computational model of cross-situational word learning. Cognitive Science, 34(6), 1017–1063. DOI: 10.1111/j.1551-6709.2010.01104.x

Frank, M. C., Goodman, N. D., & Tenenbaum, J. B. (2009). Using speakers’ referential intentions to model early cross-situational word learning. Psychological Science, 20(5), 578–585. PMID: 19389131

Siskind, J. M. (1996). A computational study of cross-situational techniques for learning word-to-meaning mappings. Cognition, 61(1–2), 39–91. DOI: 10.1016/S0010-0277(96)00728-7

Yang, C. D. (2002). Knowledge and Learning in Natural Language. Oxford University Press.

Yu, C., Ballard, D. H., & Aslin, R. N. (2005). The role of embodied intention in early lexical acquisition. Cognitive Science, 29(6), 961–1005. DOI: 10.1207/s15516709cog0000_40

Have you looked at Niyogi’s ? I started reading it last year but didn’t get much past the first chapter.

Great point- I’ll look it up!

Regarding the coming together of Language Acquisition and Language Evolution from the perspective of Applied Linguistics, there seem to be at least two strands I know of. First, there are Vygotskian sociocultural theorists such as James Lantolf, who eschew second language acquisition in favour of development and have recommended adopting Tomasello’s construct theory of language in favour of traditional computational models (Lantolf 2006 OUP). Then there are complex dynamic theorists, who also like Tomasello (Larsen-Freeman 2008 OUP). Larsen-Freeman even has a chapter on language evolution in her book on Complex Systems. It is an interesting time, and I hope to dedicate my master’s thesis to bringing a bit of language evolution into Applied Linguistics.

Jonathan – Thanks for the references, I’ll look them up. What is the topic of your master’s thesis? I’d be interested in hearing about it.

Hi Sean – I’ve just started the master’s, so I’m still figuring out exactly what to do. I’m reading a lot of construction-based, frequentist and language evolution theory at the moment. The overall goal is to bring language evolution into applied linguistics as much as possible, or to bridge the acquisition and evolution communities, as you put it. My university is particularly strong in corpus linguistics, so maybe a recent, forward-pointing diachronic study of changes in English, Portuguese, or both (I’m also interested in bilingualism), allied to a model of second or bilingual language acquisition based on language evolution theory. Or something else…

I’m currently studying lexis and will pick up Yang’s book and Fazly’s article on the basis of your review. This blog is excellent.