If we accept that language is not only a conveyor of cultural information, but is itself a socially learned and culturally transmitted system, then an individual’s linguistic knowledge is the result of observing the linguistic behaviour of others. This well-attested process of language acquisition is often termed Iterated Learning, and it opens up a new avenue for investigating the design features of language: the idea that cultural, as opposed to biological, evolution is fundamental to understanding these features.

Much of the literature regarding Iterated Learning focuses on a computational modelling approach, where “the central idea behind the ILM [Iterated Learning Model] is to model directly the way language exists and persists via two forms of representation” (Kirby & Hurford, 2002, pg. 123). These two forms consist of an I-Language (the internal representation of language as a pattern of neural connections) and an E-Language (the external representation of language as sets of utterances). I-Language gives rise to E-Language through production, and E-Language gives rise to I-Language through induction. This cycle of continued production and induction is used to understand how structure emerges from non-linguistic communication systems (Kirby & Hurford, 2002) and how language changes from one form into another (Niyogi & Berwick, 2007).

To briefly summarise, these models contain a single agent who is taught an initial random language (consisting of mappings between meanings and signals). The output of the agent is then used to teach the next generation, and so on. After numerous generational turnovers of teachers and observers, some of these models provide an intriguing insight into the emergence of linguistic phenomena such as compositionality and regularity (Kirby & Hurford, 2002).
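The generational loop described above can be sketched in a few lines of Python. This is only a minimal illustration of the transmission cycle, not the model from Kirby & Hurford (2002): the learner here simply memorises what it sees and invents random signals for unseen meanings, so it deliberately leaves out the generalising induction step that produces compositionality. The names `random_signal`, `learn`, `produce`, `iterate` and the `n_observed` parameter are all hypothetical.

```python
import random

def random_signal(rng, length=3, alphabet="ab"):
    """Invent an arbitrary signal (assumption: signals are short strings)."""
    return "".join(rng.choice(alphabet) for _ in range(length))

def learn(observations, meanings, rng):
    """Induce a language from observed (meaning, signal) pairs.

    Assumption: a rote learner that memorises observed pairs and invents
    a random signal for every meaning it never saw expressed.
    """
    language = dict(observations)
    for meaning in meanings:
        if meaning not in language:
            language[meaning] = random_signal(rng)
    return language

def produce(language, n_observed, rng):
    """Produce utterances for a random subset of meanings."""
    shown = rng.sample(sorted(language), n_observed)
    return [(meaning, language[meaning]) for meaning in shown]

def iterate(meanings, generations, n_observed, seed=0):
    """Run a single-agent chain: each learner becomes the next teacher."""
    rng = random.Random(seed)
    language = {m: random_signal(rng) for m in meanings}  # initial random language
    history = [language]
    for _ in range(generations):
        data = produce(language, n_observed, rng)
        language = learn(data, meanings, rng)
        history.append(language)
    return history

if __name__ == "__main__":
    chain = iterate(["circle", "square", "triangle", "star"],
                    generations=5, n_observed=2)
    for generation, language in enumerate(chain):
        print(generation, language)
```

When `n_observed` equals the number of meanings, the language is copied unchanged from one generation to the next; with a smaller value, the unseen part of the language is reinvented each time, which is exactly the gap a generalising learner would fill differently.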

A common theme running through a wide array of these Iterated Learning studies emphasises language as being a compromise between two factors: “the biases of learners, and other constraints acting on language during their transmission.” (Smith, 2009, pg. 697). What is perhaps fundamental to this view is encapsulated in the second factor: that transmission is a mediating force in the shaping of language. For instance, Kirby & Hurford (2002) show how the infinite expressivity found in languages is a result of the finite set of data presented during acquisition. With this transmission bottleneck restricting the amount of data presented, learners must generalise in order to learn the data, but not to the extent where the language is one signal for all possible meanings. Tempering maximal expressivity with generalisation provides an adequate explanation for recursive compositionality (see Kirby & Hurford, 2002), without appealing to the need for an intricately specified Language Acquisition Device (LAD). As Zuidema (2003) succinctly puts it: “the poverty of the stimulus solves the poverty of the stimulus”.

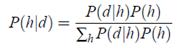

That transmission and learner biases work in tandem in forcing languages to adapt suggests we cannot rely solely on cognitive explanations. To further investigate this relationship between transmission and biases, and in response to criticisms concerning the bias towards a minimum description length (see Vogt, 2005a), recent models of iterated learning are frequently run with Bayesian agents (Griffiths & Kalish, 2005; Kirby & Smith, 2007; Dediu, 2009; Smith, 2009; Ferdinand & Zuidema, 2009). Essentially, by using Bayes’ theorem the role of learners is to select a hypothesis h on the basis of its posterior probability when exposed to data d:

$$P(h \mid d) = \frac{P(d \mid h)\,P(h)}{\sum_{h'} P(d \mid h')\,P(h')}$$

P(d|h) provides the likelihood of the data d being produced under a certain hypothesis h, with P(h) being the prior probability of each hypothesis; the denominator sums over all candidate hypotheses h′ so that the posterior probabilities add up to one. When applied to models of language and iterated learning, the hypotheses are considered to be the set of possible grammars, whilst the data consists of sets of utterances required to induce a language (Smith, 2009). Importantly, the prior probability distribution over grammars is the learning bias, which may be domain-specific or domain-general (ibid).
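As a small worked example of this calculation, the sketch below computes the posterior over a discrete set of grammars. The two grammars, their utterance probabilities and the function name `posterior` are made up for illustration, and utterances are assumed to be independent given the grammar.

```python
def posterior(prior, likelihood, data):
    """Posterior P(h|d) over a discrete set of hypotheses.

    prior:      {hypothesis: P(h)}
    likelihood: {hypothesis: {utterance: P(utterance | h)}}
    data:       list of observed utterances (assumed independent given h)
    """
    joint = {}
    for h, p_h in prior.items():
        p_d_given_h = 1.0
        for utterance in data:
            p_d_given_h *= likelihood[h].get(utterance, 0.0)
        joint[h] = p_h * p_d_given_h          # P(d|h) * P(h)
    evidence = sum(joint.values())            # sum over all hypotheses
    if evidence == 0:
        raise ValueError("data has zero probability under every hypothesis")
    return {h: p / evidence for h, p in joint.items()}

if __name__ == "__main__":
    # Hypothetical two-grammar example: g1 is favoured a priori,
    # but the observed utterances are more typical of g2.
    prior = {"g1": 0.6, "g2": 0.4}
    likelihood = {"g1": {"a": 0.7, "b": 0.3},
                  "g2": {"a": 0.3, "b": 0.7}}
    print(posterior(prior, likelihood, ["b", "b"]))
```

Here two observations of "b" are enough to outweigh the prior preference for g1: the posterior comes out at roughly 0.22 for g1 and 0.78 for g2.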

A critical question for Bayesian learning, and still a point of contention, is the role of prior biases, a debate that largely stems from two papers (Griffiths & Kalish, 2005; Kirby, Dowman & Griffiths, 2007). Griffiths & Kalish show that if agents select a grammar with a probability proportional to its posterior probability, then the stationary distribution is merely a reflection of the prior distribution (Smith, 2009). By sampling from the posterior, the agents will revert to the prior regardless of any influence the transmission vector may exert. As Smith (2009) states: “this suggests a transparent relationship between the prior bias of learners and the observed distribution of languages in the world: the typological distribution exactly reflects the biases of learners.” (pg. 698).

Kirby et al. (2007), however, demonstrate that Griffiths & Kalish’s result arose because the agents were samplers, as opposed to another type of hypothesis selection: maximum a posteriori (MAP) selection, whose agents are known as maximisers. Instead of sampling from the posterior, maximisers choose the hypothesis with the highest posterior probability (Ferdinand & Zuidema, 2008). As such, MAP selection offers a more muddied picture of the relationship between learner biases and typological distributions, in that the distribution of languages will “reflect the ordering of hypotheses in the prior, but differences in prior probability are magnified, such that the a priori most likely hypothesis is overrepresented in the stationary distribution.” (Smith, 2009, pg. 698). This invariably leads to a situation where different priors can result in the same stationary distribution, and by varying the transmission factors (the amount of data presented, the noise between signals and meanings, etc.) convergence is not always towards the prior (ibid).
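To make the sampler/maximiser contrast concrete, here is a toy calculation. It is not the model from either paper, just a sketch under simple assumptions: two hypotheses, two utterance types, each hypothesis producing its "own" utterance type with probability 1 - `noise`, and `n` utterances passed on per generation. All names and parameters are hypothetical. Because the chain has only two states, its stationary distribution can be computed exactly rather than simulated.

```python
from math import comb

def likelihood(k, n, h, noise):
    """P(k of the n utterances are of type 0 | hypothesis h)."""
    p_type0 = 1 - noise if h == 0 else noise
    return comb(n, k) * p_type0 ** k * (1 - p_type0) ** (n - k)

def posterior(k, n, prior, noise):
    """Posterior over the two hypotheses after seeing k type-0 utterances."""
    joint = [prior[h] * likelihood(k, n, h, noise) for h in (0, 1)]
    total = sum(joint)
    return [j / total for j in joint]

def choice_probs(k, n, prior, noise, strategy):
    """Probability that the learner adopts each hypothesis."""
    post = posterior(k, n, prior, noise)
    if strategy == "sample":
        return post                      # sample from the posterior
    # MAP selection; ties go to hypothesis 0 (an arbitrary choice)
    return [1.0, 0.0] if post[0] >= post[1] else [0.0, 1.0]

def stationary(n, prior, noise, strategy):
    """Exact stationary distribution of the two-state teacher-learner chain."""
    # T[i][j]: probability the learner adopts j when the teacher uses i
    T = [[sum(likelihood(k, n, i, noise)
              * choice_probs(k, n, prior, noise, strategy)[j]
              for k in range(n + 1))
          for j in (0, 1)]
         for i in (0, 1)]
    pi0 = T[1][0] / (T[0][1] + T[1][0])  # closed form for a two-state chain
    return [pi0, 1 - pi0]

if __name__ == "__main__":
    prior = [0.6, 0.4]
    print("sampler  :", stationary(2, prior, 0.3, "sample"))
    print("maximiser:", stationary(2, prior, 0.3, "map"))
```

With a prior of (0.6, 0.4), a noise of 0.3 and two utterances per generation, the sampler chain settles on the prior itself, whereas the maximiser chain puts about 0.85 on the favoured hypothesis. Changing only the amount of data to three utterances gives the maximisers an even 0.5/0.5 split in this toy setting, a small illustration of how transmission factors can override the prior.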

Iterated Learning and Population Dynamics

In the ILMs discussed, each generation consists of a single individual. This limitation breeds two further problems: 1) only vertical transmission is modelled; and, 2) there is little variability in the input. First, by neglecting peer-to-peer (horizontal) learning, ILMs ignore a potentially vital mechanism in language acquisition (Swarup & Gasser, 2009). For instance, Harris (1998) claims the children of immigrants tend to more readily adopt the language and accent of their adopted country, on the basis that children identify with their peers (classmates etc.), rather than their parents.

Several computational models investigate horizontal transfer (Batali, 1998; Vogt, 2005a; 2005b; Swarup & Gasser, 2009), highlighting how population dynamics play a pivotal role in accounting for the emergence of highly structured languages. Vogt (2005a; 2005b), for instance, uses the iterated learning model to investigate symbol grounding, which is simply the notion that “the symbol may be viewed as a structural coupling between an agent’s sensorimotor activations and its environment.” (Vogt, 2002, pg. 429). Using a discrimination games framework, in which a pair of speakers and hearers are presented with a “context of a few geometrical shapes which differ in their shapes and colors [sic]” (Swarup & Gasser, 2009, pg. 217), Vogt creates a scenario whereby a speaker selects a particular shape, and then describes it to the hearer. By comparing conditions that differ in whether the speaker’s chosen shape is revealed to the hearer before (observational game) or after (guessing game) communication, and in the transmission dynamic (vertical versus horizontal), Vogt (2005a; 2005b) reaches three general conclusions: 1) single speaker-hearer dynamics in the observational condition converge on a compositional language; 2) increasing the population size to three agents results in compositionality being stable in just the guessing condition, with agents in the observational condition seeing their compositional language replaced by a holistic language; and, 3) the inclusion of peer learning in the model consistently produces stable compositional languages across both conditions, without the need for a transmission bottleneck.

But what about our second criticism: that the ILM fails to account for variability in the input? When acquiring a language, children are not learning from a solitary source: they are being exposed to a whole host of sources and learning environments. With this in mind, claims for increased learnability being the result of an evolutionary pressure (see Smith, 2006) are limited: “An evolutionary pressure is always manifested through variation (creation of alternatives with differing fitness) and selection (fitness-based elimination of some alternatives).” (Swarup & Gasser, 2009, pg. 217). Niyogi & Berwick (2009) pick up on such limitations, and contrast the ILM with their own Social Learning (SL) paradigm, where each individual learner is exposed to data from multiple sources within a population. Using historical data, they show that their SL model “more faithfully replicates the dynamics of the evolution of Middle English [my emphasis]” (ibid, pg. 1), which is taken as evidence that IL is deficient in two fundamental aspects: (1) it cannot explain language stability over time, and (2) its linear dynamics do not account for phase transitions (ibid).

Regardless of Niyogi & Berwick’s specific criticisms concerning language change, they do raise an important point about learning from multiple sources. Yet this point is not an inherent weakness of the Iterated Learning process, as suggested by the authors, but is instead a weakness of the single-agent models. Indeed, this is the position taken by three recent papers (Dediu, 2009; Smith, 2009; Ferdinand & Zuidema, 2009) which examine population dynamics in the context of Bayesian and iterated learning.

Smith (2009), for instance, looks at the implications of a Bayesian learner learning from two different sources of grammar. In this two-grammar model, agents at each generation are exposed to data produced by multiple individuals. As already discussed, the findings of Griffiths & Kalish (2005) and Kirby et al. (2007) highlight differences between single teacher-learner chains of samplers and of a posteriori maximisers: to reiterate, the former converge to the prior genetic bias, while the dynamic is more complex for learners utilising the latter maximising strategy. By introducing diversity into the model, Smith’s main result is that, after cultural evolution has run its course, and regardless of whether agents are maximisers or samplers, the resulting distribution of grammars “is no longer the same as the prior distribution. Rather, one language predominates, with the winning language being determined by the starting proportions of the two grammars and their prior probability.” (pg. 699).

However, as Smith concedes, the model does not really expand much beyond previous Bayesian ILMs, other than introducing multiple individuals into a vertically transmitted chain. Ferdinand & Zuidema (2009) and Dediu (2009) create relatively complex and dynamic models that consider not only population size, but also population heterogeneity: agents in a population do not always have the same genetic biases. For example, Ferdinand & Zuidema introduce a great deal of variability into their model, including “the prior, hypothesis structure, bottleneck size, hypothesis choice strategy, population size, and population heterogeneity in terms of different priors per agent.” (2009, pg. 1788). After replicating the results from previous single-chain, or to use their term, monadic, Bayesian ILMs (specifically, Griffiths & Kalish, 2005; Kirby, Dowman & Griffiths, 2007), their main result in polyadic (multi-agent) populations is that by just increasing the population size to 2, the “sampler model’s stationary distribution does not strictly mirror the prior.” (Ferdinand & Zuidema, 2009, pg. 1790). This is true regardless of whether the population is heterogeneous or homogeneous, but it also means the differentiation between sampler and maximiser models is not as distinct as in monadic chains. In fact, both polyadic maximisers and polyadic samplers tend to behave similarly to monadic maximisers, with heterogeneous agents’ hypothesis choices converging “as they are allowed to share more and more data, despite having fixed and different priors from each other.” (ibid, pg. 1789).
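The structural difference between monadic and polyadic chains can be sketched as follows. This is a loose illustration and should not be read as Smith’s or Ferdinand & Zuidema’s actual model: it assumes two hypotheses, two utterance types, a hypothetical `noise` parameter, and learners whose `n` utterances each come from a randomly chosen member of the previous generation. Giving each agent its own prior (the `priors0` list, also hypothetical) makes the population heterogeneous.

```python
import random

def posterior_h0(k, n, prior0, noise):
    """P(h0 | k of n utterances are type 0); the binomial coefficient cancels."""
    joint0 = prior0 * (1 - noise) ** k * noise ** (n - k)
    joint1 = (1 - prior0) * noise ** k * (1 - noise) ** (n - k)
    return joint0 / (joint0 + joint1)

def learn(teachers, prior0, n, noise, strategy, rng):
    """One learner pools n utterances drawn from randomly chosen teachers."""
    k = 0
    for _ in range(n):
        teacher = rng.choice(teachers)           # 0 or 1: the teacher's hypothesis
        p_type0 = 1 - noise if teacher == 0 else noise
        if rng.random() < p_type0:
            k += 1
    p0 = posterior_h0(k, n, prior0, noise)
    if strategy == "sample":
        return 0 if rng.random() < p0 else 1     # sample from the posterior
    return 0 if p0 >= 0.5 else 1                 # MAP; ties go to hypothesis 0

def run(priors0, generations, n, noise, strategy, seed=0):
    """Evolve a population; priors0[i] is agent i's prior probability of h0."""
    rng = random.Random(seed)
    population = [rng.choice((0, 1)) for _ in priors0]
    history = [population]
    for _ in range(generations):
        population = [learn(population, p, n, noise, strategy, rng)
                      for p in priors0]
        history.append(population)
    return history

if __name__ == "__main__":
    # Heterogeneous population: three agents with different priors.
    for generation in run([0.7, 0.5, 0.3], generations=10, n=6,
                          noise=0.2, strategy="sample"):
        print(generation)
```

Passing a single-element `priors0` list recovers a monadic chain, so the same function can be used to compare the two settings under identical assumptions.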

In contrast to both Ferdinand & Zuidema (2009) and Smith (2009), Dediu (2009) finds that by enlarging the population to two agents, and adding heterogeneous biases, the apparent differences between samplers and maximisers are diluted. Furthermore, in the case of chains consisting of both sampler and maximiser pairs, the results tend to mirror those found in single chains of samplers: convergence towards the prior. Lastly, on the basis of a previous study (Dediu, 2008), Dediu then shows how, in general, non-Bayesian heterogeneous “learners with very different genetic biases do converge on a common language.” (pg. 2).

It seems all three authors tend to converge on the somewhat diplomatic suggestion that it is too early to draw any strong conclusions concerning the interplay between biases and cultural transmission. Despite the conflicting results regarding the prior biases, with Dediu claiming agents do tend to converge towards the prior whilst Ferdinand & Zuidema (2009) and Smith (2009) find the opposite, the general consensus supports “the view that the process of cultural transmission plays a very important mediating role” (Dediu, 2009, pg. 9).

Main References

Swarup, S., & Gasser, L. (2009). The Iterated Classification Game: A New Model of the Cultural Transmission of Language. Adaptive Behavior, 17 (3), 213-235. DOI: 10.1177/1059712309105818

Dediu, D. (2009). Genetic biasing through cultural transmission: Do simple Bayesian models of language evolution generalise? Journal of Theoretical Biology, 259 (3), 552-561. DOI: 10.1016/j.jtbi.2009.04.004

Kirby, S., Dowman, M., & Griffiths, T. L. (2007). Innateness and culture in the evolution of language. Proceedings of the National Academy of Sciences of the United States of America, 104 (12), 5241-5245. PMID: 17360393

Reali, F., & Griffiths, T. L. (2009). The evolution of frequency distributions: Relating regularization to inductive biases through iterated learning. Cognition, 111 (3), 317-328. PMID: 19327759
