The notion of a domain-specific language acquisition device is something that still divides linguists. In an ongoing debate spanning at least several decades, there is still, to my knowledge, no evidence for the existence of a Universal Grammar. You'd be forgiven, though, for thinking that the problem was solved many years ago, especially if you were to believe the now sixteen-year-old words of Massimo Piattelli-Palmarini (1994):

The extreme specificity of the language system, indeed, is a fact, not just a working hypothesis, even less a heuristically convenient postulation. Doubting that there are language-specific, innate computational capacities today is a bit like being still dubious about the very existence of molecules, in spite of the awesome progress of molecular biology.

Suffice it to say, the analogy between scepticism about molecules and scepticism about Universal Grammar is a dud, even if it does turn out that the latter exists. Why? Well, as stated above: we still don't know if humans have, or for that matter even require, an innate ability to process certain grammatical principles. The rationale for thinking that we have some innate capacity for acquiring language can be delineated into a twofold argument: first, children seem adept at rapidly learning a language, even though they aren't exposed to all of the data; and second, cognitive science has told us that our brains are massively modular, or at the very least should contain some component that is specific to language (see the FLB/FLN distinction in Hauser, Chomsky & Fitch, 2002). I think the first point has been done to death on this blog: cultural evolution can provide an alternative explanation of how children successfully learn language (see earlier posts on this blog, and Smith & Kirby, 2008). What I haven't really spoken about is the mechanism behind our ability to process language, or, to put it differently: how are our brains organised to process language?

Domain-general regions linked in a domain-specific manner

I'm thinking out loud here, so I'm quite happy for neuroscientists, and anyone else qualified in the matter, to tell me whether this line of inquiry is bunk. First, to clarify an important point: when I refer to language-specific processing capabilities, I'm not talking about a solitary part of the brain that is dedicated to processing all aspects of what makes up a language. Instead, almost everyone will agree that language is a distributed system, utilising separate brain regions to process different features of language within broad categories such as syntax and phonology, and that, contrary to classical accounts of the neurobiology of language, it makes use of both the left and right hemispheres. Take, for instance, one particular account: a three-stage working model for the processing of emotional prosody (Schirmer & Kotz, 2006):

Sensory processing (Stage 1): Acoustic analysis is mediated by bilateral auditory processing areas. Integration (Stage 2): Processing along the auditory 'what' pathway integrates emotionally significant acoustic information to derive an emotional 'gestalt'. This pathway projects from the superior temporal gyrus (STG) to the anterior superior temporal sulcus (STS) and might be lateralized to the right hemisphere (RH). Cognition (Stage 3): Emotional information derived at the level of the STS is made available for higher-order cognitive processes. For example, explicit evaluative judgments of emotional prosody are mediated by the right inferior [frontal] gyrus (IFG) and orbitofrontal cortex (OFC), whereas the integration of emotional prosody into language processing recruits inferior frontal gyrus in the left hemisphere (LH).

So language processing, even at the level of individual features, is complex to say the least. Disagreement emerges over the extent to which language is subserved by general learning and processing mechanisms, such as memory, that are not the preserve of language-specific information. The alternative is that there is some unique component in our brain that is specific to language, one candidate being recursion. Now, from my own reading on the subject, I'm becoming more and more convinced by Christiansen & Chater's (2008) explanation that language is shaped by domain-general regions:

We propose, then, to invert the perspective on language evolution, shifting the focus from the evolution of language users to the evolution of languages (see Figure 2). From this perspective, we argue that the mystery of the fit between human language acquisition and processing mechanisms and natural language may be unraveled; and we might, furthermore, understand how language attained its apparently ‘idiosyncratic’ structure. Instead of puzzling that humans can only learn a small subset of a huge set of possible languages, we take a different starting point: the observation that natural languages exist only because humans can produce, learn and process them. In order for languages to be passed on from generation to generation, they must adapt to the properties of the human learning and processing mechanisms; and the structures in each language form a highly interdependent system, rather than a collection of independent traits. We therefore propose to construe language as an organism, adapted through natural selection to fit a particular ecological niche: the human brain. Thus, the key to understanding the fit between language and the brain is understanding how language has been shaped by the brain, not the reverse. [my emphasis]

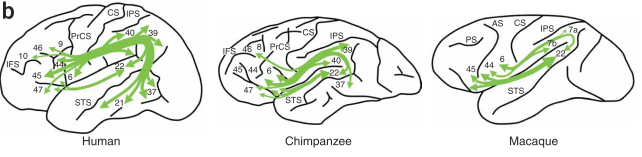

This is not to say that I think language did not provide selection pressures on these domain-general structures. In fact, I think it's more likely that natural selection favoured networks conducive to language processing, through the selection of fibre tracts or pathways such as the arcuate fasciculus (see the figure below, taken from Rilling et al., 2008), than specific mental modules dedicated to certain types of linguistic processing. The dedicated processing, then, comes from the networks themselves: they link together in a unique fashion to process particular features. Those networks that make language acquisition and processing easier will, over phylogenetic time, become more specialised toward that type of processing. For natural selection to operate on these regions at all, however, this networking potential must first be unmasked. This is where I think the work of Terrence Deacon (2010) and Kazuo Okanoya (2007) comes in.

One example that both Deacon and Okanoya relate to the evolution of language is vocal learning in songbirds. Particular attention is paid to the White-backed Munia: over the course of approximately 250 years, Japanese breeders bred the Munia for colouration, and eventually produced the Bengalese Finch. What is relevant about these domesticated hybrids is their ability to acquire songs via social learning. The White-backed Munia, by contrast, does not acquire its song via social learning; rather, its song is genetically inherited. This leads to differences in the rigidity of the songs: Bengalese Finches display far more variability, both within and between individuals, than their wild cousins. It appears, almost counter-intuitively, that by relaxing the stabilising effects of natural and sexual selection on birdsong, domestication allowed the Bengalese Finch to become behaviourally more complex. This is clearly demonstrated by the fact that the socially acquired songs require far more forebrain nuclei and interconnections than the innately pre-specified song of the White-backed Munia. As Deacon explains:

As constraints on song generation degraded with prolonged domestication, other neural systems that previously were too weak to have an influence on song structure could now have an effect. These include systems involved in motor learning, conditionally modifiable behaviors, and auditory learning. Because sensory and motor biases can be significantly affected by experience, song structure could also become increasingly subject to auditory experience and the influence of social stimuli. In this way, additional neural circuit involvement and the increased importance of social transmission in the determination of song structure can be reflections of functional dedifferentiation, and yet can also be sources of serendipitous synergistic effects as well.

By appealing to the dedifferentiation and redistribution effects of relaxed selection, Deacon argues for a tendency to shift from an innate, localised function to a more distributed array of systems. A similar situation may have occurred in human evolution: relaxed selection led to the genetic dedifferentiation of the nervous system, which not only produced the functional complexity found in human language but also contributed to more widespread degeneration, influencing our suite of seemingly unique cognitive, social and emotional abilities. Even though developmental flexibility was probably central to opening up this new potential, natural selection probably prepared the neural circuitry for a specific type of input, which in this case is language. That is to say: at some point in our evolutionary history, those individuals with genes suited to the rapid acquisition of language were probably selected. However, the genes involved were probably those that aided chatter between seemingly disparate regions, allowing them to quickly develop their synergistic function if they received linguistic input.

To reiterate:

- Relaxed selection allowed developmental processes to open up new levels of functional complexity;

- This functional complexity was achieved through allowing additional neural systems to influence a specific type of behaviour;

- With these new possibilities now unmasked, natural selection then acted to maintain this functional complexity by preparing individuals for linguistic input;

- One suggestion for how this might be achieved is through selection for neural circuitry that aids in creating the networks that subserve language processing;

- So instead of having domain-specific modules, humans have domain-general modules that are networked in a domain-specific manner;

- Rapidly acquired, and seemingly ubiquitous, features across languages are therefore more likely to have been the product of cultural evolutionary processes that enable a language to adapt to various constraints, including domain-general mechanisms, the transmission vector, demography and the environment.

References

Christiansen, M., & Chater, N. (2008). Language as shaped by the brain. Behavioral and Brain Sciences, 31(5). DOI: 10.1017/S0140525X08004998

Deacon, T. (2010). Colloquium Paper: A role for relaxed selection in the evolution of the language capacity. Proceedings of the National Academy of Sciences, 107(Supplement 2), 9000-9006. DOI: 10.1073/pnas.0914624107

To be honest, I'm not even sure to what extent the interesting explanation you proposed would go against current conceptualizations of UG.

In his recent writings Chomsky defines UG rather weakly as the:

So his current ideas on nativism seem to be rather weak:

What is more, recent work within the framework of the Minimalist Program tries to reduce the amount of information that has to be innate as far as possible. So, apart from a possible language-specific endowment and learning from experience, Minimalists try to attribute as much as they can to a 'third factor', namely "Principles not specific to the faculty of language", which consist of things like

So, seen in this way, the current Chomskyan approach to the question of what UG is might be compatible in principle with the approaches you discuss here.

@Michael:

These days, Chomsky himself is really playing hide-the-ball about the criterial features of linguistic nativism, and what they entail. Practicing linguists, and professionals in related fields (second language teaching, translation, language software), know what the nod to UG really ends up meaning. A good example is the enormously influential Cambridge monograph from Lydia White (2003), which is full of arguments from L2 acquisition data to language-specific principles and parameters, in the process creating a caricature of “input” and ignoring the power of frequency-sensitivity in general learning systems. Her introductory example (the “Overt Pronoun Constraint”) was, to my mind, demolished by Ariel (1990) thirteen years prior, and yet White presents the argument as essentially unobjectionable. From there, it only gets worse, a descent into a morass of details about acquisition which, allegedly, cannot be explained by facts about the linguistic environment and domain-general principles of learning. Where the rubber meets the road, this is what we’re dealing with, not some facile argument about how human children differ from kittens, and how language (as a synchronic “slice” of a single speaker, say) has some features that other systems don’t.

Just to add to the pile-on, I believe that Hauser, Chomsky & Fitch (2002) entertained the notion that recursion is the only thing we’ve got that animals don’t. That would seem to be a very minimal UG, if indeed it’s grammar at all. Recursion is computationally powerful, and computational power is what Chomsky’s old arguments are about, but it doesn’t impose much in the way of structure.

For anyone besides Michael (who wrote the post in question), I suggest you go over to Shared Symbolic Storage and read the latest post, on neural reuse. In it, he refers to the following article: Neural Reuse: A Fundamental Organizational Principle of the Brain. It's a fantastic paper (from what I've read so far) and I'm quite pleased that some of what I was saying isn't utter nonsense.

@Bill: With regard to recursion, I agree with what you say about it not necessarily being grammar. In truth, I'm probably aligned with Dan Everett's claims on recursion: that language doesn't really need discrete infinity to function perfectly well for the purposes of complex communication.