If you read my last post here at Replicated Typo to the very end, you may remember that I promised to recommend a book and to return to one of the topics of this previous post. I won’t do this today, but I promise I will catch up on it in due time.

What I just did – promising something – is a nice example of one of the two functions of language which Vyvyan Evans from Bangor University distinguished in his talk on “The Human Meaning-Making Engine” yesterday at the UK Cognitive Linguistics Conference. More specifically, the act of promising is an example of the interactive function of language, which is of course closely intertwined with its symbolic function. Evans proposed two different sources for these two functions. The interactive function, he argued, arises from the human instinct for cooperation, whereas meaning arises from the interaction between the linguistic and the conceptual system. While language provides the “How” of meaning-making, the conceptual system provides the “What”. Evans used some vivid examples (e.g. this cartoon exemplifying nonverbal communication) to make clear that communication is not contingent on language. However, “language massively amplifies our communicative potential.” The linguistic system, he argued, has evolved as an executive control system for the conceptual system. While the latter is broadly comparable with that of other animals, especially great apes, the linguistic system is uniquely human. What makes it unique, however, is not the ability to refer to things in the world, which can arguably be found in other animals as well. What is uniquely human, he argued, is the ability to symbolically refer in a sign-to-sign (word-to-word) direction rather than “just” in a sign-to-world (word-to-world) direction. Evans illustrated this “word-to-word” direction with Hans-Jörg Schmid’s (e.g. 2000; see also here) work on “shell nouns”, i.e. nouns “used in texts to refer to other passages of the text and to reify them and characterize them in certain ways.” For instance, the stuff I was talking about in the last paragraph would be an example of a shell noun.

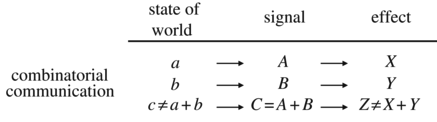

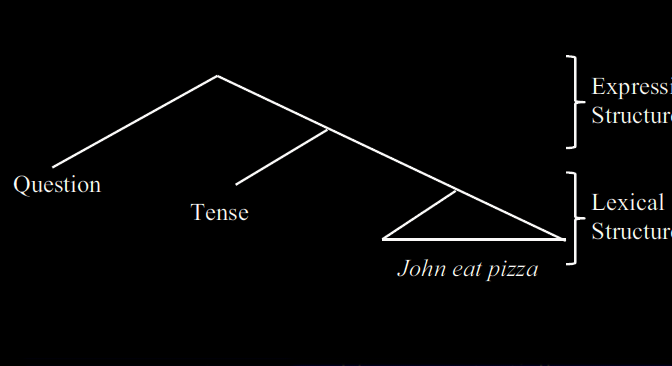

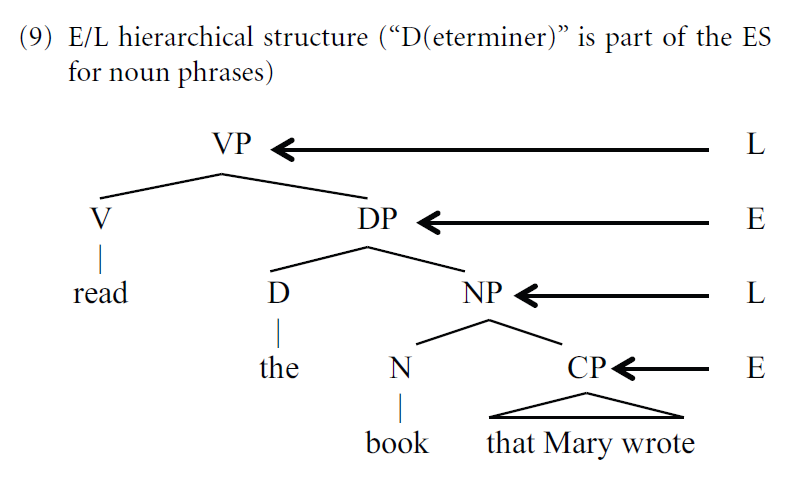

According to Evans, the “word-to-word” direction is crucial for the emergence of e.g. lexical categories and syntax, i.e. the “closed-class” system of language. Grammaticalization studies indicate that the “open-class” system of human languages is evolutionarily older than the “closed-class” system, which consists of grammatical constructions (in the broadest sense). However, Evans also emphasized that there is a lot of meaning even in closed-class constructions, as e.g. Adele Goldberg’s work on argument structure constructions shows: We can make sense of a sentence like “Someone somethinged something to someone” although the open-class items are left unspecified.

Constructions, he argued, index or cue simulations, i.e. re-activations of body-based states stored in cortical and subcortical brain regions. He discussed this with the example of the cognitive model for Wales: We know that Wales is a geographical entity. Furthermore, we know that “there are lots of sheep, that the Welsh play Rugby, and that they dress in a funny way.” (Sorry, James. Sorry, Sean.) Oh, and “when you’re in Wales, you shouldn’t say, It’s really nice to be in England, because you will be lynched.”

On a more serious note, the cognitive models connected to closed-class constructions, e.g. simple past -ed or progressive -ing, are of course much more abstract but can also be assumed to arise from embodied simulations (cf. e.g. Bergen 2012). But in addition to the cognitive dimension, language of course also has a social and interactive dimension drawing on the apparently instinctive drive towards cooperative behaviour. Culture (or what Tomasello calls “collective intentionality”) is contingent on this deep instinct which Levinson (2006) calls the “human interaction engine”. Evans’ “meaning-making engine” is the logical continuation of this idea.

Just like Evans’ theory of meaning (LCCM theory), his idea of the “meaning-making engine” is basically an attempt at integrating a broad variety of approaches into a coherent model. This might seem a bit eclectic at first, but it’s definitely not the worst thing to do, given that there is significant conceptual overlap between different theories which, however, tends to be blurred by terminological incongruities. Apart from Deacon’s (1997) “Symbolic Species” and Tomasello’s work on shared and joint intentionality, which he explicitly discussed, he draws on various ideas that play a key role in Cognitive Linguistics. For example, the distinction between open- and closed-class systems features prominently in Talmy’s (2000) Cognitive Semantics, as does the notion of the human conceptual system. The idea of meaning as conceptualization and embodied simulation of course goes back to the groundbreaking work of, among others, Lakoff (1987) and Langacker (1987, 1991), although empirical support for this hypothesis has been gathered only recently in the framework of experimental semantics (cf. Matlock & Winter forthc. – if you have an account at academia.edu, you can read this paper here). All in all, then, Evans’ approach might prove an important further step towards integrating Cognitive Linguistics and language evolution research, as has been proposed by Michael and James in a variety of talks and papers (see e.g. here).

Needless to say, it’s impossible to judge from a necessarily fairly sketchy conference presentation whether this model qualifies as an appropriate and comprehensive account of the emergence of meaning. But it definitely looks promising and I’m looking forward to Evans’ book-length treatment of the topics he touched upon in his talk. For now, we have to content ourselves with his abstract from the conference booklet:

In his landmark work, The Symbolic Species (1997), cognitive neurobiologist Terrence Deacon argues that human intelligence was achieved by our forebears crossing what he terms the “symbolic threshold”. Language, he argues, goes beyond the communicative systems of other species by moving from indexical reference – relations between vocalisations and objects/events in the world — to symbolic reference — the ability to develop relationships between words — paving the way for syntax. But something is still missing from this picture. In this talk, I argue that symbolic reference (in Deacon’s terms), was made possible by parametric knowledge: lexical units have a type of meaning, quite schematic in nature, that is independent of the objects/entities in the world that words refer to. I sketch this notion of parametric knowledge, with detailed examples. I also consider the interactional intelligence that must have arisen in ancestral humans, paving the way for parametric knowledge to arise. And, I also consider changes to the primate brain-plan that must have co-evolved with this new type of knowledge, enabling modern Homo sapiens to become so smart.

References

Bergen, Benjamin K. (2012): Louder than Words. The New Science of How the Mind Makes Meaning. New York: Basic Books.

Deacon, Terrence W. (1997): The Symbolic Species. The Co-Evolution of Language and the Brain. New York, London: Norton.

Lakoff, George (1987): Women, Fire, and Dangerous Things. What Categories Reveal about the Mind. Chicago: The University of Chicago Press.

Langacker, Ronald W. (1987): Foundations of Cognitive Grammar. Vol. 1. Theoretical Prerequisites. Stanford: Stanford University Press.

Langacker, Ronald W. (1991): Foundations of Cognitive Grammar. Vol. 2. Descriptive Application. Stanford: Stanford University Press.

Levinson, Stephen C. (2006): On the Human “Interaction Engine”. In: Enfield, Nick J.; Levinson, Stephen C. (eds.): Roots of Human Sociality. Culture, Cognition and Interaction. Oxford: Berg, 39–69.

Matlock, Teenie & Winter, Bodo (forthc.): Experimental Semantics. In: Heine, Bernd; Narrog, Heiko (eds.): The Oxford Handbook of Linguistic Analysis. 2nd ed. Oxford: Oxford University Press.

Schmid, Hans-Jörg (2000): English Abstract Nouns as Conceptual Shells. From Corpus to Cognition. Berlin, New York: De Gruyter (Topics in English Linguistics, 34).

Talmy, Leonard (2000): Toward a Cognitive Semantics. 2 vols. Cambridge, Mass.: MIT Press.