In his clear and engaging plenary talk, Simon Fisher, director of the “Language & Genetics” Department at the Max Planck Institute for Psycholinguistics in the Netherlands, summarized the current state of research on what molecular biology and genetics can contribute to the question of language evolution. Fisher was involved in the discovery of the (in)famous FOXP2 gene, which was found to be linked to a hereditary language impairment in an English family. He has also done a lot of subsequent work on this gene, so naturally it was also the main focus of his talk.

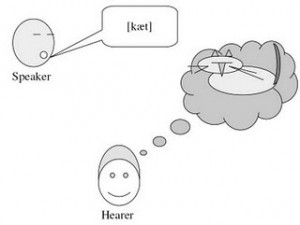

But before turning to this area, he dispelled what he called the ‘abstract gene myth’. According to Fisher, it cannot be stressed enough that there is no direct relation between genes and behavior and that we have to “mind the gap”, as he put it. A long chain of interactions stands between genes on the one side and speech and language on the other. DNA codes for proteins, which shape the development of cells. Cells in turn form neural circuits, which make up the human brain as a whole, which finally gives rise to speech and language.

When we look at this complex net of relations, one thing we can say is that there is a subset of children who grow up in normal environments but still do not develop normal language skills. From a genetic perspective, it is significant that in some of these children the impairment cannot be explained by other obvious conditions such as cerebral palsy or hearing loss. Moreover, in some cases these language disorders are heritable. This suggests that genetic factors play a role in at least some of these impairments.

The most famous example of such a case of heritable language impairment is the English KE family, in which affected members carry one damaged copy of the FOXP2 gene. These family members exhibit impaired speech development. Specifically, they have difficulty learning and producing the sequences of complex oro-facial movements that underlie speech. In addition, they show deficits in a wide range of language-related skills, including spoken and written language. It thus has to be emphasized that the damaged FOXP2 gene seems to affect linguistic development broadly, not just speech. It is also important that the disorder is not accompanied by general motor dyspraxia.

Nonverbal deficits are not central to the disorder. Affected individuals start out with a normal nonverbal IQ but then fail to keep up with their peers. This is very likely because unimpaired language opens the door to enhancing intelligence in various ways, an advantage that people with only one functional copy of FOXP2 cannot exploit to the same degree. Overall, deficits in verbal cognition are much more severe and wide-ranging than any other impairments. It is also worth noting that after the FOXP2 mutation was discovered in the KE family, researchers found a dozen further cases in which a damaged FOXP2 gene led to language-related problems.

FOXP2 is a so-called transcription factor, which means that it can activate and repress other genes. As Fisher pointed out, FOXP2 functions as a kind of ‘genetic dimmer switch’ that tunes down the expression of other genes. It should therefore be clear that FOXP2 is not “the gene for language.” Versions of FOXP2 are found in highly similar form in vertebrate species that lack speech and language, so it already played very ancient roles in the brain of the common ancestor we share with these species. Nor is FOXP2 expressed exclusively in the brain: it is also involved in the development of the lungs, the intestines, and the heart. However, work by Simon Fisher and his colleagues shows that FOXP2 is important for neural connectivity. Interestingly, mice with one damaged copy of FOXP2 show entirely normal baseline motor behavior, yet they have significant deficits in what Fisher called ‘voluntary motor learning’.

From an evolutionary perspective, it is relevant that the gene changed very little over the course of vertebrate evolution. However, there seem to have been more changes to the protein on the human lineage since our split from chimpanzees than in the far longer period separating chimpanzees from mice: human FOXP2 differs from the chimpanzee version by two amino acids, whereas the chimpanzee and mouse versions differ by only one. This means that when it comes to FOXP2, the chimpanzee protein is actually closer to the mouse protein than to the human one.

Overall, what current knowledge about the molecular bases of language tells us is that these uniquely human capacities build on evolutionarily ancient systems. However, much more work is needed to understand the influence of FOXP2 at the molecular and cellular levels and how these effects relate to the development of neural circuits, the brain, and, finally, our capacity for fully formed, complex human language.